Anthropic decoded the vectors Claude uses to represent abstract concepts

"Feature vectors" can represent anything from racism to the Golden Gate Bridge.

Last week, Anthropic announced a significant breakthrough in our understanding of how large language models work. The research focused on Claude 3 Sonnet, the mid-sized version of Anthropic’s latest frontier model (Sonnet is larger than Haiku but smaller than Opus). Anthropic showed that it could transform Claude’s otherwise inscrutable numeric representation of words into a combination of “features,” many of which can be understood by human beings.

If you’ve read my explainer on large language models, you know they represent words (technically parts of words called tokens) as lists of thousands of numbers called vectors. Just as longitude and latitude uniquely identify a point on the two-dimensional surface of the earth, so a word vector locates a word in an imaginary “word space” with thousands of dimensions.

Representing words this way allows language models to reason about them mathematically. Related words (like “cat” and “dog”) have similar numerical representations and hence are close together in word space.

You can do arithmetic with word vectors. For example, if you subtract the vector for France from the vector for Paris, you get a new vector that roughly captures the idea of “capital city of.” Add it to the vector for another country and you land near that country’s capital: Paris minus France plus Italy gives a vector close to the one for Rome, and Paris minus France plus Japan gives a vector close to the one for Tokyo.
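Here is a minimal sketch of that arithmetic, assuming hand-built toy vectors in which one dimension loosely means “is a capital city” and the others stand for individual countries. Real embeddings are learned, much larger, and obey this kind of arithmetic only approximately, which is why the result is matched to the *nearest* word rather than expected to be an exact hit. The vocabulary and the `nearest`/`analogy` helpers are hypothetical; excluding the three input words from the search follows the usual convention for word-analogy tests.

```python
import numpy as np

# Hypothetical toy embeddings: dimension 0 loosely means "is a capital city";
# dimensions 1-3 stand for France, Italy, and Japan respectively.
VOCAB = {
    "France": np.array([0.0, 1.0, 0.0, 0.0]),
    "Italy":  np.array([0.0, 0.0, 1.0, 0.0]),
    "Japan":  np.array([0.0, 0.0, 0.0, 1.0]),
    "Paris":  np.array([1.0, 1.0, 0.0, 0.0]),
    "Rome":   np.array([1.0, 0.0, 1.0, 0.0]),
    "Tokyo":  np.array([1.0, 0.0, 0.0, 1.0]),
}

def cos(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest(vector: np.ndarray, exclude: set) -> str:
    """Return the vocabulary word whose vector is most similar to `vector`."""
    candidates = {w: v for w, v in VOCAB.items() if w not in exclude}
    return max(candidates, key=lambda w: cos(vector, candidates[w]))

def analogy(a: str, b: str, c: str) -> str:
    """Answer 'a is to b as ? is to c' by computing a - b + c."""
    target = VOCAB[a] - VOCAB[b] + VOCAB[c]
    return nearest(target, exclude={a, b, c})

print(analogy("Paris", "France", "Italy"))  # Rome
print(analogy("Paris", "France", "Japan"))  # Tokyo
```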

A paper last year demonstrated that GPT-2 uses this exact mechanism to answer questions about national capitals. But uncovering relationships like this has been a slow and painstaking process. There’s still a lot we don’t know about how and why LLMs represent words the way they do.

Anthropic’s new research sheds fresh light on this important question by demonstrating that the vectors Claude uses to represent words can be understood, approximately, as a weighted sum of “features”: vectors that represent a variety of abstract concepts, from immunology to coding errors to the Golden Gate Bridge. The researchers also show that if you modify Claude to artificially boost one of these features, Claude’s behavior changes in the way you might expect.
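The idea of a word vector as a weighted sum of features can be sketched with a toy “dictionary” of feature vectors. In Anthropic’s paper the feature directions were learned with a sparse autoencoder, there are millions of them, and they are not orthogonal to one another. This sketch instead assumes three hand-built orthonormal feature vectors in a 6-dimensional space, so that a simple encoder (project onto each feature, then zero out negatives with a ReLU, as a sparse autoencoder’s encoder also does) recovers the weights exactly. The feature names and the `compose`/`decompose` helpers are hypothetical.

```python
import numpy as np

# Hypothetical labels for three toy features.
FEATURE_NAMES = ["golden_gate_bridge", "immunology", "code_error"]

# Build three orthonormal feature directions in a 6-dimensional toy space.
rng = np.random.default_rng(0)
q, _ = np.linalg.qr(rng.normal(size=(6, 3)))  # q: 6x3 with orthonormal columns
FEATURES = q.T                                # shape (3, 6): one feature per row

def compose(activations: np.ndarray) -> np.ndarray:
    """Build a word vector as a weighted sum of the feature vectors."""
    return activations @ FEATURES

def decompose(vector: np.ndarray) -> np.ndarray:
    """Recover feature weights: project onto each feature, then apply ReLU."""
    return np.maximum(FEATURES @ vector, 0.0)

acts = np.array([3.0, 0.0, 0.5])  # mostly "Golden Gate Bridge", a little "code error"
x = compose(acts)                 # a 6-dimensional vector that looks like noise
recovered = decompose(x)
print(dict(zip(FEATURE_NAMES, recovered.round(3).tolist())))
```

In a real model the reconstruction is only approximate, and for any given word most features have zero activation. That sparsity is what makes the decomposition readable by humans.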

For the first 24 hours after releasing its research, Anthropic let people experiment with a hilarious variant of Claude called Golden Gate Claude. Anthropic modified the regular Claude chatbot by adding a “Golden Gate Bridge” feature vector to Claude’s internal representation for every word. The result was a model that couldn’t shut up about the Golden Gate Bridge. No matter what question a user asked, the chatbot would work the Golden Gate Bridge into its response.
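Mechanically, the modification described above amounts to adding the same scaled vector to every token’s internal representation. Here is a minimal sketch, assuming the representations for a prompt are stored as a NumPy array with one row per token and using a random vector as a stand-in for the Golden Gate Bridge feature. The `steer` function and its `strength` parameter are hypothetical, and this is not Anthropic’s actual code.

```python
import numpy as np

def steer(residual: np.ndarray, feature: np.ndarray, strength: float) -> np.ndarray:
    """Add `strength` times a unit-length feature direction to every token's vector.

    residual: shape (num_tokens, d_model), one row per token.
    feature:  shape (d_model,), the direction to boost.
    """
    unit = feature / np.linalg.norm(feature)
    return residual + strength * unit  # broadcasting adds the same vector to each row

rng = np.random.default_rng(42)
residual = rng.normal(size=(5, 16))  # 5 tokens in a 16-dimensional toy model
golden_gate = rng.normal(size=16)    # hypothetical "Golden Gate Bridge" direction
steered = steer(residual, golden_gate, strength=10.0)

unit = golden_gate / np.linalg.norm(golden_gate)
print((residual @ unit).round(2))  # how strongly each token expresses the feature before
print((steered @ unit).round(2))   # the same values, each shifted up by 10
```

Because the feature direction has unit length, adding `strength * unit` raises every token’s projection onto that direction by exactly `strength` while leaving all directions orthogonal to it untouched.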

Golden Gate Claude was silly, but this research could prove useful for Anthropic and the broader industry. The Anthropic team identified features associated with deception, the creation of bombs and bioweapons, racism, and other undesirable behaviors. Anthropic’s research could lead to new tools to detect model misbehavior—or perhaps even to prevent it altogether.

Notably, Anthropic did this research on a large, modern frontier model. A lot of earlier interpretability research has focused on models like GPT-2 that are too small to have real-world applications. In contrast, Claude 3 Sonnet is a GPT-3.5-class model that people are using in real applications.

Large language models: a quick refresher

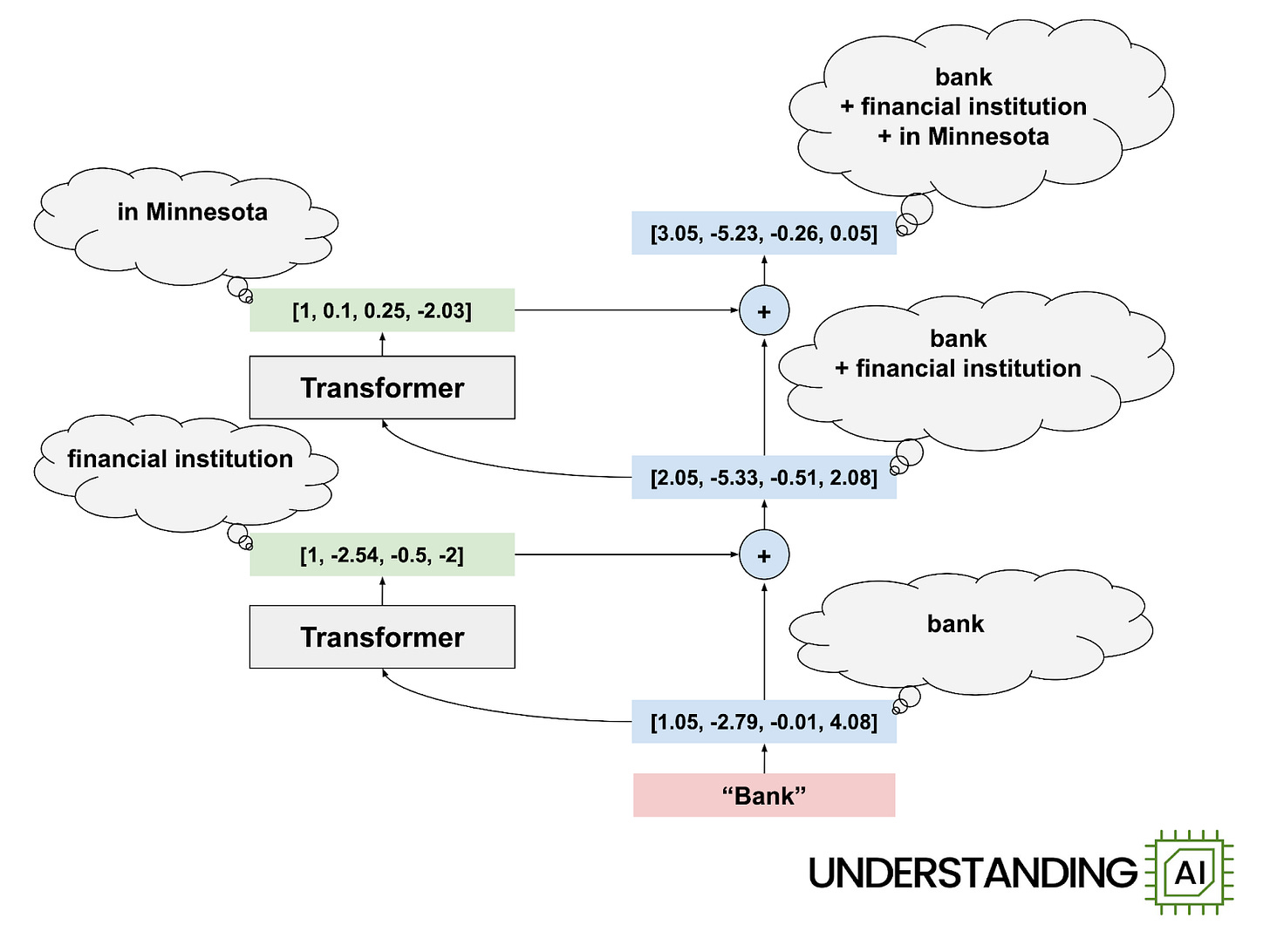

To understand Anthropic’s research, we need to review a bit of the background from last year’s explainer on LLMs. The basic building block of LLMs is the transformer, a neural network architecture invented by Google researchers in 2017. A modern LLM is a stack of dozens of transformers, with the output from one transformer becoming the input for the next one. Here’s a stylized diagram:

My LLM explainer last year had a diagram showing each transformer’s output being fed directly into the next transformer. But you can also envision it as each transformer adding values to (or subtracting values from) a running total called the “residual stream”: the blue boxes on the right side of the diagram above. Each blue box represents the model’s updated understanding of the current input word. That understanding becomes more nuanced at each layer, so that by the time it reaches the top layer, the model is in a good position to predict the next word.
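The “running total” view can be written out directly. In this sketch each layer is a hypothetical stand-in (a random matrix followed by `tanh`) rather than a real transformer block with attention and feed-forward sublayers; what matters is that each layer reads the current stream and adds its output back in. Update entries can be positive or negative, which is how a layer “subtracts” from the total.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, NUM_LAYERS = 8, 4

# Hypothetical stand-in weights, one matrix per "layer".
WEIGHTS = [rng.normal(scale=0.3, size=(D_MODEL, D_MODEL)) for _ in range(NUM_LAYERS)]

def make_layer(w: np.ndarray):
    # A factory function so each closure captures its own weight matrix.
    return lambda stream: np.tanh(w @ stream)

LAYERS = [make_layer(w) for w in WEIGHTS]

def run(token_vector: np.ndarray):
    stream = token_vector.copy()
    updates = []
    for layer in LAYERS:
        update = layer(stream)    # each layer reads the current running total...
        stream = stream + update  # ...and adds its contribution back in
        updates.append(update)
    return stream, updates

x = rng.normal(size=D_MODEL)  # the input word's initial vector
final, updates = run(x)

# The final state is the original vector plus every layer's contribution.
print(np.allclose(final, x + sum(updates)))  # True
```

Because every layer only adds to the stream, the final vector splits cleanly into the original word vector plus one contribution per layer, which is one reason interpretability researchers find this view convenient.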

Subscribe to Understanding AI to keep reading this post and get 7 days of free access to the full post archives.