Computer-use agents seem like a dead end

OpenAI's Operator was the best model I tried. But that's not saying much.

It’s Agent Week at Understanding AI!

Some people envision a future where sophisticated AI agents act as “drop-in remote workers.” Last October, Anthropic took a step in this direction when it introduced a computer-use agent that controls a personal computer with a keyboard and mouse.

OpenAI followed suit in January, introducing its own computer-use agent called Operator. Operator was originally based on GPT-4o; OpenAI upgraded it to o3 in May.

A Chinese startup called Manus introduced its own buzzy computer-use agent in March.

These agents reflect the two big trends I’ve written about this week:

They were trained using reinforcement learning to perform complex, multi-step tasks.

They use tools to “click,” “scroll,” and “type” as they navigate a website.

I spent time with all three agents over the last week, and right now none of them is ready for real-world use. It’s not even close. They are slow, clunky, and make a lot of mistakes.

But even after those flaws get fixed, and they will, I don’t expect AI agents like these to take off. The main reason is that I don’t think accessing a website with a computer-use agent will significantly improve the user’s experience. Quite the contrary, I expect users will find computer-use agents to be a confusing and clumsy interface with no obvious benefits.

Putting computer-use agents to the test

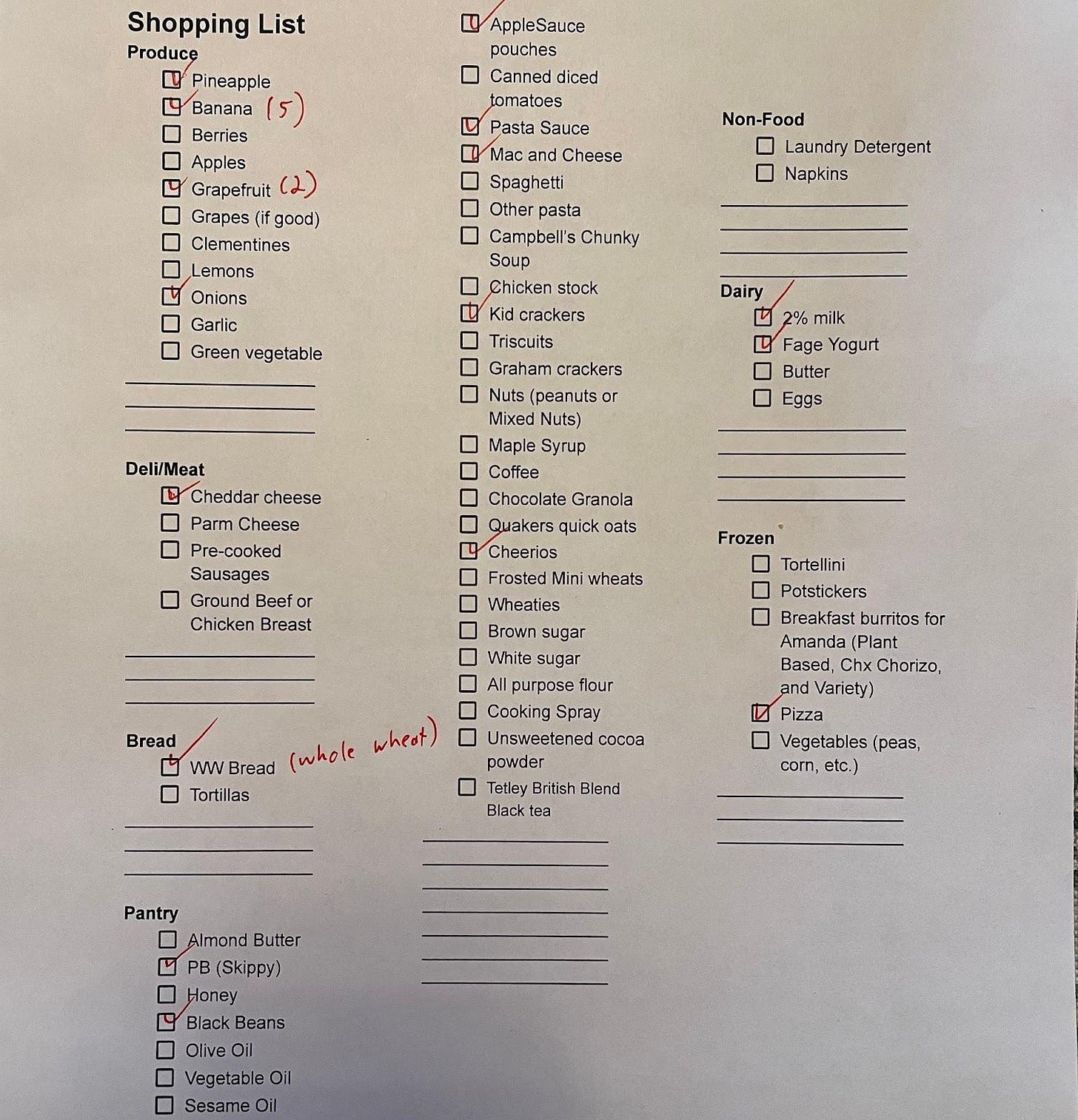

In the launch video for Operator, an OpenAI employee shows the agent a hand-written, five-item shopping list and asks it to place an online grocery order. I wanted to try this out for myself. But to make things more interesting, I gave Operator a more realistic shopping list—one with 16 items instead of just five.

This image is based on a template I use for personal shopping trips. When I gave it to Operator, the results weren’t great:

When I asked it to list items that had checkmarks, it included several unchecked items (lemons, almond butter, honey, vegetable oil, and olive oil). It misread the “(5)” after bananas as “15.” And it failed to include Cheerios on the list.

After asking it to correct those mistakes, I had Operator pull up the website for the Harris Teeter grocery store nearest to my house. Operator did this perfectly.

Finally I had Operator put my items into an online shopping cart. It added 13 out of 16 items but forgot to include pineapple, onions, and pasta sauce.

Operator struggled even more during a second attempt. It got logged out partway through the shopping process. After I logged the agent back in, it got more and more confused. It started insisting—incorrectly—that some items were not available for delivery and would need to be picked up at the store. Ultimately, it took more than 30 minutes—and several interventions from me—for Operator to fill my shopping cart.

For comparison, it took me less than four minutes to order the same 16 items without help from AI.

The experience was bad enough that I can’t imagine any normal consumer using Operator for grocery shopping. And the two other computer-use agents did even worse.
