Gemini Advanced is not that advanced

Google's new chatbot is almost as good as ChatGPT—but not quite.

Google has had a confusing release schedule for Gemini, its new family of AI models intended to compete with ChatGPT. Last December, Google announced that the Gemini 1.0 model would come in three versions: Nano, Pro, and Ultra. However, the most advanced model, Ultra, wouldn’t become publicly available until the new year.

Google finally released Gemini Ultra 1.0 to the public two weeks ago. At the same time, it renamed its Bard chatbot Gemini and called the premium version—the one powered by the Gemini Pro 1.0 Ultra model—Gemini Advanced.

Then last week, Google announced Gemini 1.5 Pro, a model that offers performance roughly comparable to Gemini 1.0 Ultra but supports context windows of up to 1 million tokens. Gemini 1.5 Pro isn’t yet available to the general public, but a Google white paper describes its abilities.

So the most powerful chatbot Google offers to the general public right now is Gemini Advanced, which is powered by the Gemini 1.0 Ultra model. Last December, Google published a white paper showing Gemini 1.0 Ultra edging out GPT-4 on a number of benchmarks. But benchmarks can be gamed, so I wanted to try Gemini Advanced myself. In recent days, I’ve been putting this system through its paces.

Gemini 1.0 Ultra is pretty good. It’s a multimodal model that can accept a mixture of text and images as input and can generate both images and text. It can do most of the things ChatGPT does, and if you’d shown me this model 18 months ago I would have been blown away.

Still, Google hasn’t quite caught up with the capabilities of ChatGPT. In my testing, I found a number of cases where ChatGPT outperforms Gemini Advanced. I didn’t find a single task where Gemini Advanced clearly outperformed ChatGPT.

Gemini Advanced is easy to trick

What weighs more, a pound of bricks or a pound of feathers? This riddle is sufficiently well-known that it probably shows up hundreds of times in the massive training sets used to train large language models. These models have learned that even though bricks are usually heavier than feathers, a pound of bricks weighs the same as a pound of feathers by definition.

But Gemini over-learned this lesson. I asked Google’s chatbot, “What weighs more a pound of bricks or two pounds of feathers?”

“This seems like a trick question, but there's a simple answer: They weigh the same,” Gemini Advanced responded. “A pound is a unit of weight. A pound of bricks and two pounds of feathers both weigh two pounds. The catch here is that feathers would take up more space because they're less dense than bricks.”

ChatGPT wasn’t fooled, responding that “two pounds of feathers weigh more than a pound of bricks,” adding that “two pounds of any substance will always weigh more than one pound of another.”

Another famous puzzle is the Monty Hall problem: the game show host Monty Hall shows you three doors. One has a car behind it and the other two have goats. After you choose a door, Hall (who knows which door has the car) always chooses another door and shows that it has a goat behind it. Does it make sense to switch?

The counterintuitive but correct answer is yes. If you choose your initial door at random, there is a one-third chance it has a car behind it. This means that (assuming Monty Hall always opens a door with a goat behind it) there is a two-thirds chance that the remaining door has the car.

This story presumably appears many times in Gemini’s training data, and once again Gemini over-learns the lesson:

As you can see, I modified the original scenario so that Monty Hall reveals two doors with goats behind them. Obviously, in this case you want to stick with your original door, which definitely has a car behind it. But Gemini doesn’t notice the difference and gives the standard—and in this case wrong—answer to the Monty Hall problem.

Once again, ChatGPT is quicker on the draw, observing that “the scenario you've described deviates from the classic Monty Hall problem by having the host open both doors that you did not choose, revealing goats behind each.” ChatGPT correctly says that it “doesn't make sense” to switch.

These examples aren’t original to me. They circulated online in early 2023 when GPT-3.5 was failing to handle them correctly. A year later, ChatGPT (powered by GPT-4) handles them correctly, while Gemini Advanced (powered by Gemini 1.0 Ultra) does not.

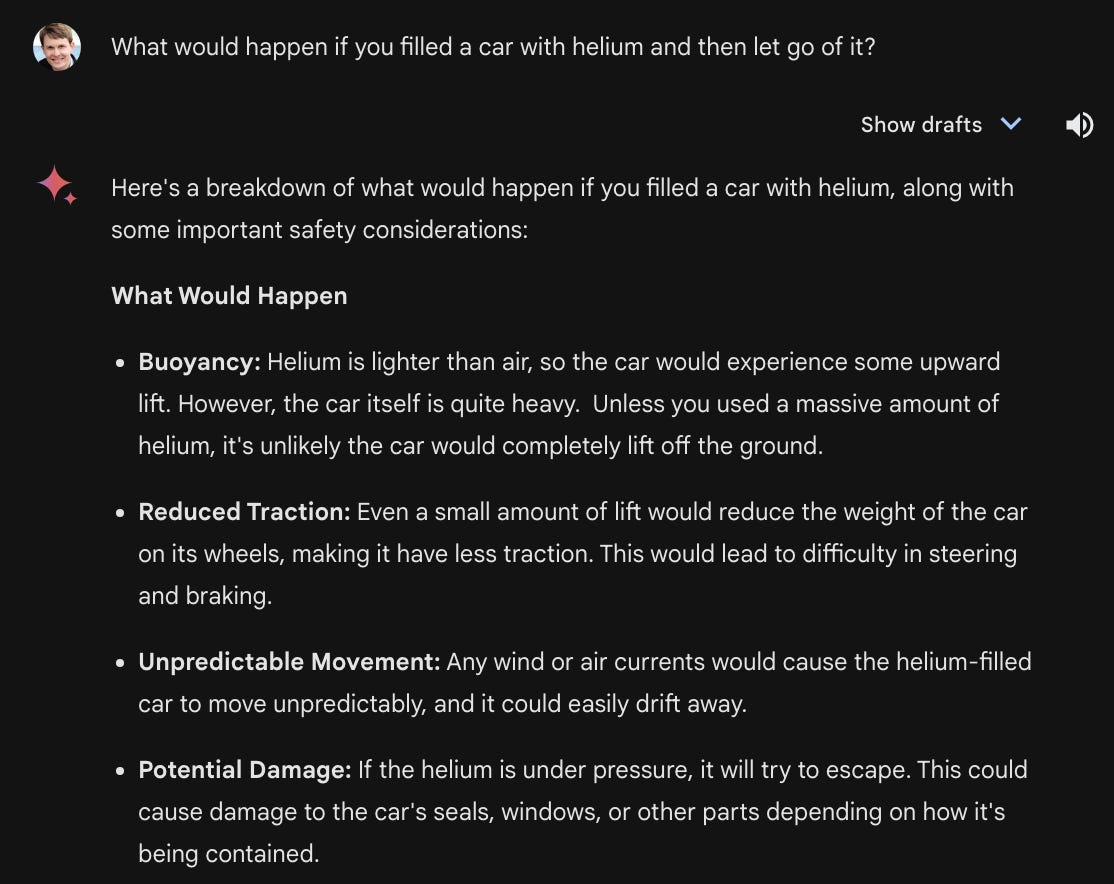

Another way to trick a large language models is to ask it to analyze a situation that is physically possible but unlikely to occur in the real world (and hence to be described by training data). For example, I asked Gemini Advanced “What would happen if you filled a car with helium and then let go of it?”

Gemini was smart enough to know the car wouldn’t float away like a balloon. It said that “the car itself is quite heavy” and therefore “it's unlikely the car would completely lift off the ground.” But Gemini added that “any wind or air currents would cause the helium-filled car to move unpredictably, and it could easily drift away.”

ChatGPT’s answer was more, well, grounded. When I asked the same question it flatly stated that “not much would happen in terms of affecting the car's weight or buoyancy.” It didn’t say anything about the car being blown away by air currents:

Gemini gets confused by word problems

Gemini, like the latest version of GPT-4, can take images as well as text as input. The Gemini white paper showcases this capability with an example of Gemini evaluating a physics problem that included a hand-written diagram of a skier going down a frictionless slope.

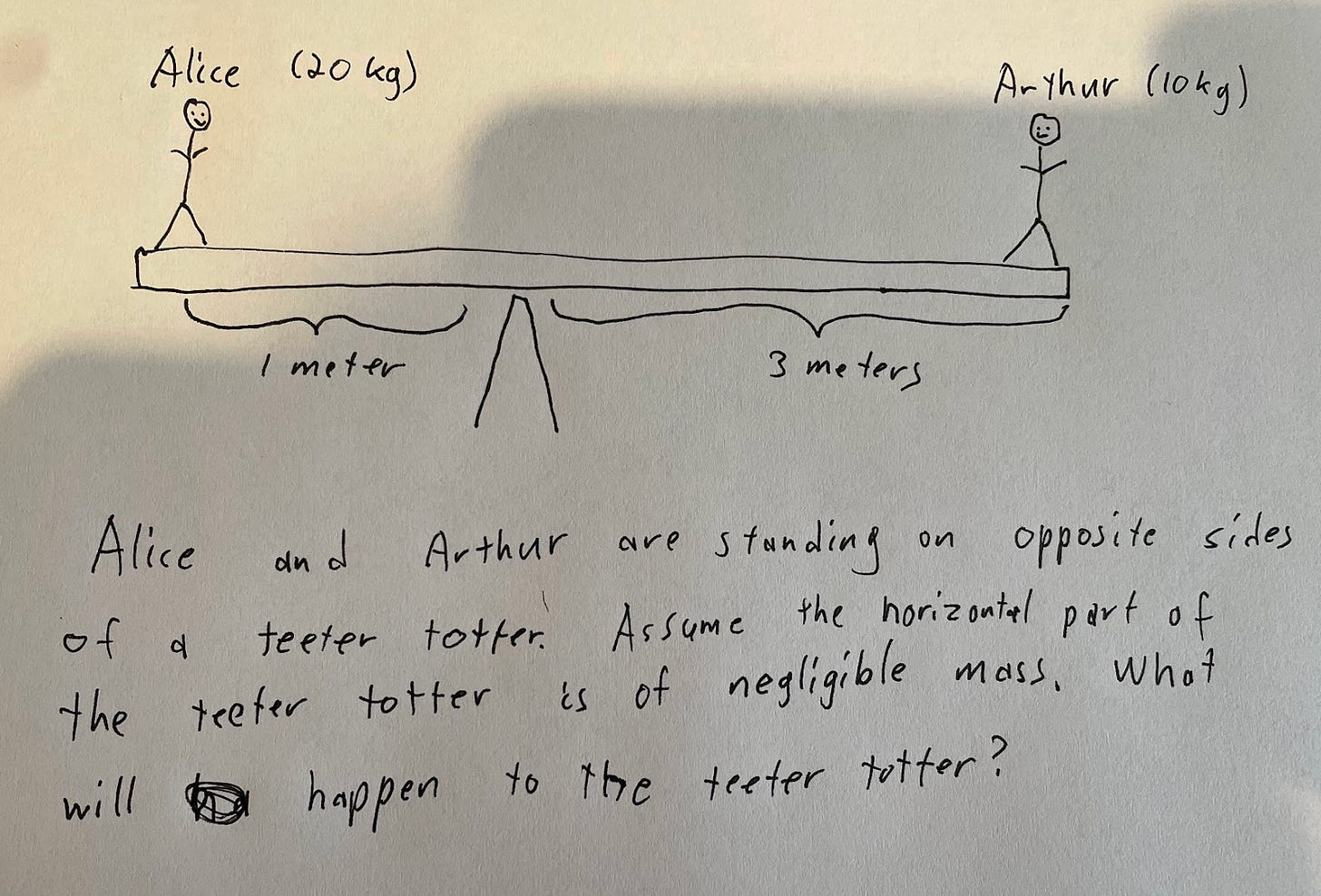

I created a hand-drawn physics problem of my own to test Gemini’s ability to interpret images:

The correct answer is that the teeter-totter will tilt toward Arthur since he is three times as far from the fulcrum as Alice, whereas Alice only weighs twice as much as Arthur.

I asked Gemini to solve this problem four times, and in each case it wrongly predicted that the teeter-totter would tilt toward Alice. A couple of times, it got the distances backwards, stating that Alice was three meters from the fulcrum while Arthur was one meter away. Another time it correctly stated that “the distance from the fulcrum to Alice is 1 meter and the distance from the fulcrum to Arthur is 3 meters.” However, it still wrongly stated “this means that the torque created by Alice is greater than the torque created by Arthur, because torque is calculated by multiplying force by distance.”

I tested ChatGPT four times as well. It consistently got the correct answer: that the teeter totter will tilt toward Arthur. ChatGPT has a bit of an advantage here since it is able to write and execute Python code, but Gemini struggled to understand the diagram and set up the problem correctly. So even with code execution ability I expect Gemini would have gotten the wrong answer.

Next I asked Gemini “What is worth more 100 pennies or three quarters?” Gemini correctly noted that the pennies are worth $1.00, while the quarters are worth $0.75. But then it still concluded the quarters are worth more. Gemini also believes that two dimes are worth more than five nickels and three nickels are worth more than 20 pennies.

I asked ChatGPT the same coin-related questions and it got all of them right.

Gemini is bad at counting and obsessed with fruit slices

How many apples are in this photo? Gemini’s consistent, wrong, view is that there are six. Most of the time, ChatGPT correctly says there are eight, though one time it said nine. If I rotate the image by 90 degrees, then ChatGPT thinks there are nine apples, while Gemini still thinks there are six.

What about this one? Gemini Advanced wrongly says there are four apples; it switches its answer to six if I rotate the image 90 degrees. ChatGPT consistently says there are six apples regardless of orientation.

Finally I tried a photo with a pineapple, banana, grapefruit, and two apples. ChatGPT correctly stated there were five pieces of fruit. Gemini is convinced there are four, and its explanation is hilarious:

Gemini is obsessed with this slice theory. Here are some other responses for the same image:

“If the banana has been peeled and sliced, then there would be multiple pieces of banana. If the grapefruit has been cut into segments, then there would be multiple pieces of grapefruit as well.”

“There are also several slices of the apple visible. It is difficult to say for sure how many slices there are, but it looks like there are at least four.”

“It’s possible that the banana and apple have been sliced beforehand, although they appear whole in the image. If so, then there would be at least 6 pieces of fruit (1 pineapple, 1 banana, 1 apple, 1 grapefruit, 2 banana slices, and 2 apple slices).”

Gemini is not a good copy editor

One of the most practical uses I’ve found for ChatGPT is as a copy editor. Before I publish an article, I’ll paste it into ChatGPT and prompt the chatbot to identify grammar and spelling mistakes. The software isn’t perfect, but the process only takes a minute and ChatGPT will often catch a few typos.

So I decided to see how Gemini does at the same task. I made a copy of my last article and deliberately introduced about a dozen spelling and grammar mistakes. Then I told Gemini it was an expert copy editor and asked it to proofread the article.

Gemini mostly gave me stylistic advice—a lot of it bad. But the chatbot did spot one typo: a “their” that should have been a “there.”

When I gave ChatGPT the same prompt, it also made some bad stylistic suggestions. But it caught five mistakes, including a repeated word, a change of “there” to “their,” a misspelling of “books,” and a misspelling of “Judge Rakoff” (as “Judge Rakof) on the second reference.

I repeated the experiment several times with each model. Performance varied from one run to the next, but ChatGPT did better each time. The OpenAI model would catch three to five typos in a typical run, whereas Gemini usually caught one or two.

Gemini also has a bad habit of hallucinating typos. During some tests it suggested I fix errors that did not actually exist in the draft I pasted into the prompt. I didn’t see ChatGPT do this.

Gemini is a work in progress

It would be premature to draw any sweeping conclusions from these results. We should remember that it’s been less than a year since Google consolidated its AI efforts under DeepMind CEO Demis Hassabis. Google announced Gemini 1.5 Pro last week and I assume that there will be a Gemini 1.5 Ultra model in the coming months. So this race is far from over.

But for now, OpenAI remains the clear leader. And that’s a bit surprising.

Google invented the transformer and other key innovations that enabled the invention of LLMs. The company has a deep bench of AI talent and access to vast amounts of data and computing power. So you’d expect Google to be an AI powerhouse. Yet so far, Google has been struggling just to match OpenAI’s capabilities.

Understanding AI is a 100 percent reader-supported publication. If you’ve been enjoying free articles like this one, please become a paying subscriber. You’ll get more articles from me plus the satisfaction of knowing you are supporting my in-depth journalism.

I compared how Gemini Ultra and GPT-4 answered the same questions across a range of categories: https://theaidigest.org/gemini-vs-chatgpt

Readers voted for which answer they prefer, and it's pretty mixed - I think the performance is fairly similar for the two models.

Why is Google still lagging behind OpenAI? Here's one theory:

1. The tasks you present here are technical challenges. OpenAI is clearly trying to solve some of these challenges by grafting on particular functions that go beyond "pure" LLM-based next-token prediction -- this is why ChatGPT will now make use of Python code to solve math problems, for example. Although OpenAI is technically a nonprofit that claims it is pursuing artificial general intelligence, in reality it is acting like a commercial tech company that's iterating on its product to make it more useful to consumers.

2. In contrast, Google is a for-profit Big Tech company, yet some of the sharpest thinkers on the Google team (many coming from DeepMind) don't seem all that interested in solving these sorts of technical challenges. Francois Chollet, for example, is quite open in his research and public writings about seeing the current functionality of LLMs as quite narrow, and limited to whatever data they've been trained upon, and with very little capacity to generalize to novel situations. He -- and perhaps others at Google? -- appear to be searching for bigger conceptual breakthroughs that could lead to true general intelligence.