How transformer-based networks are improving self-driving software

The architecture behind LLMs is helping autonomous vehicles drive more smoothly.

It’s Autonomy Week! This is the third of five articles exploring the state of the self-driving industry.

As I climbed into the self-driving Prius for a demo ride, my host handed me a tablet. In the upper-left hand corner, it said “Ask Nuro Driver.” There was a big button at the bottom that said “What are you doing? Why?”

I pushed the button and the tablet responded: “I am stopped because I am yielding to a pedestrian who might cross my path.”

A few seconds later, I pushed it again: “I am accelerating because my path is clear.”

I was having a conversation with a self-driving car. Sort of.

Nuro used to be one of the hottest startups in the self-driving industry, raising $940 million in 2019, $500 million in 2020, and another $600 million in 2021. The company had big plans to deploy thousands of street-legal delivery robots.

But then Nuro had a crisis of confidence. It laid off almost half of its workers between November 2022 and May 2023. In a May 2023 blog post, Nuro’s founders announced they would delay Nuro’s next generation of delivery robots to conserve cash while they focused on research and development.

“Recent advancements in AI have increased our confidence and ability to reach true generalized and scaled autonomy faster,” the founders wrote. “Our focus now will be on making our autonomy stack even more data driven.”

One result of that shift: the tablet I held in my hands during last month’s demo ride.

It might seem silly to chat with a self-driving car, but it would be a mistake to dismiss this as just a gimmick. Borrowing techniques from large language models has made Nuro’s robots smoother, more confident drivers, according to Nuro chief operating officer Andrew Chapin.

And Nuro isn’t alone. In April, a British startup called Wayve announced LINGO-2, “the first language model to drive on public roads.” A video showed a vehicle driving through busy London streets while explaining its actions with English phrases like “reducing speed for the cyclist.” Investors were so impressed they invested $1 billion in Wayve in May.

Wayve portrays its more established competitors as dinosaurs wedded to obsolete technology. But the dinosaurs aren’t standing still. Some have started using the transformer, the architecture underlying LLMs, in their own autonomy stacks.

“We really leveraged that technology of transformers for behavioral prediction, for decision-making, for semantic understanding,” said Dmitri Dolgov, co-CEO of Google’s Waymo, in a February interview. Dolgov argued that Waymo’s self-driving software is “very nicely complementary with the world knowledge and the common sense that we get” from transformer-based language models, adding that “recently we’ve been doing work to combine the two.”

Indeed, since 2022, Waymo has published at least eight papers detailing its use of transformer-based networks for various aspects of the self-driving problem. These new networks have helped to make the Waymo Driver smoother and more confident on the road.

As impressive as these early results are, it’s not obvious that things will progress all the way to Wayve’s vision of a single LLM-like network driving our cars. More likely, self-driving systems in the future will use a mix of transformer-based networks and more traditional techniques. It’ll take a lot of trial and error to figure out the best combination of techniques to provide passengers with safe and comfortable rides.

Transformers: more than meets the eye

If you read my explainer on large language models last year, you know that LLMs are trained to predict the next token in a sequence of text. Although these models were initially text-only, researchers soon found that variants of the transformer architecture could be applied to a wide range of other domains, including images, audio files, and even sequences of amino acids.

They also found that transformer-based models can be multimodal. OpenAI’s GPT-4o, for example, can accept a mix of text, images, and audio. Under the hood, GPT-4o represents each image or snippet of audio as a sequence of tokens, which are then thrown into the same stream as the text tokens.

LLMs can also be trained to output new kinds of tokens. And researchers at Google found this ability was useful for robotics. Last year, Google DeepMind announced a vision-language-action model called RT-2. RT-2 takes in images from a robot’s cameras and textual commands from a user and outputs “action tokens” that give low-level instructions to the robot.

To accomplish this, researchers started with a conventional multimodal LLM trained on text and images harvested from the web. This model was fine-tuned using a database of robot actions. Each training example would include a high-level command (like “pick up the banana”) as well as a sequence of low-level robot actions (like “rotate second joint by 7 units, then rotate first joint by 9 units, then close gripper by 5 units”). Google created the database by having a human being execute each command using a video game controller hooked up to a robot arm.

The resulting model was not only capable of carrying out new tasks, it showed a remarkable ability to generalize across domains. For example, researchers placed several flags on a table along with a banana, then told the robot: “move banana to Germany.” RT-2 recognized the German flag and placed the banana on top of it.

The robotics training set used for fine-tuning probably didn’t have any examples involving German flags. However, the underlying model was trained on millions of images harvested from the web—some of which undoubtedly did have German flags. The model retained its understanding of flags as it was being fine-tuned to output robot commands, resulting in a model that understood both domains.

This line of research pointed to tantalizing possibilities for the self-driving industry. For example, self-driving software needs to recognize objects like fire trucks or stop signs. Traditionally, self-driving companies would build training sets manually, collecting thousands of examples of fire trucks and stop signs from driving footage, then labeling them by hand.

But what if that wasn’t necessary? What if it were possible to get comparable—or even better—performance by tweaking a pre-trained image model that wasn’t originally designed for self-driving? Not only would that save a lot of human labor, it might lead to models that can recognize a much wider range of objects.

And what about those action tokens RT-2 generated to control robots? Could a similar technique enable transformer-based foundation models to control self-driving vehicles directly?

Nobody is better positioned to answer these questions than Vincent Vanhouke, who led the Google robotics team that invented RT-2. In August, Vanhouke announced he was going to Waymo to explore how to use foundation models to develop “safer and smarter autonomous vehicles.”

Vanhoucke has only been at Waymo for a few weeks. But other Waymo researchers have been experimenting with transformers for several years—and in some cases, publishing their results in scientific papers.

Driving is a conversation

Any self-driving system needs an ability to predict the actions of other vehicles. For example, consider this driving scene I borrowed from a Waymo research paper:

Vehicle A wants to turn left, but it needs to do it without running into cars B or D. There are a number of plausible ways for this scene to unfold. Maybe B will slow down and let A turn. Maybe B will proceed, D will slow down, and A will squeeze in between them. Maybe A will wait for both vehicles to pass before making the turn. A’s actions depend on what B and D do, and C’s actions, in turn, depend on what A does.

If you are driving any of these four vehicles, you need to be able to predict where the other vehicles are likely to be one, two, and three seconds from now. Doing this is the job of the prediction module of a self-driving stack. Its goal is to output a series of predictions that look like this:

Researchers at Waymo and elsewhere struggled to model interactions like this in a realistic way. It’s not just that each individual vehicle is affected by a complex set of factors that are difficult to translate into computer code. Each vehicle’s actions depend on the actions of other vehicles. So as the number of cars increases, the computational complexity of the problem grows exponentially.

But then Waymo discovered that transformer-based networks were a good way to solve this kind of problem.

“In driving scenarios, road users may be likened to participants in a constant dialogue, continuously exchanging a dynamic series of actions and reactions mirroring the fluidity of communication,” Waymo researchers wrote in a 2023 research paper.

Just as a language model outputs a series of tokens representing text, Waymo’s vehicle prediction model outputs a series of tokens representing vehicle trajectories—things like “maintain speed and direction,” “turn 5 degrees left,” or “slow down by 3 mph”.

Rather than trying to explicitly formulate a series of rules for vehicles to follow (like “stay in your lane” and “don’t hit other vehicles”), Waymo trained the model like an LLM. The model learned the rules of driving by trying to predict the trajectories of human-driven vehicles on real roads.

This data-driven approach allowed the model to learn subtleties of vehicle interactions that are not described in any driver manual and would be hard to capture with explicit computer code.

Using richer data with end-to-end training

Before a self-driving system can predict what happens next, it needs to know what the world looks like right now. That’s the job of the perception module, the first stage of any self-driving pipeline. It tries to take noisy data from a vehicle’s sensors and boil it down to a simple model that looks like the diagram in the previous section.

Shoehorning a messy world into a limited number of categories means tossing away a lot of potentially relevant information. A self-driving system might represent a pedestrian with a bounding box and the label “pedestrian.” But human drivers can glean a lot more information by actually looking at the pedestrian.

Is she looking down at her phone, over her shoulder, or up at the sky? Is she holding up her arm to hail a taxi? Is she walking a dog? Pushing a stroller? Carrying an armful of packages? All of this information might help predict what she’ll do next, but it might not be captured by a conventional world model.

An autonomous vehicle will sometimes encounter objects—such as tumbleweeds, bags of trash, or discarded furniture—that haven’t been assigned a specific category by the perception system. And the road itself might have features like potholes, puddles, or faded lane markings that are difficult to represent with bounding boxes or a fixed list of attributes.

In April, Waymo published a paper describing a more flexible and open-ended way to represent a driving scene.

If you read my explainer last year on large language models, you know that LLMs represent words using word vectors that locate each word in an imaginary space with thousands of dimensions. Waymo’s new perception system does something similar, producing an “object vector” for every car, pedestrian, mailbox, and other object in the driving scene.

The crucial innovation here is that Waymo researchers don’t decide what information to include in the object vector. Rather, the network learns the best format for representing objects during the training process.

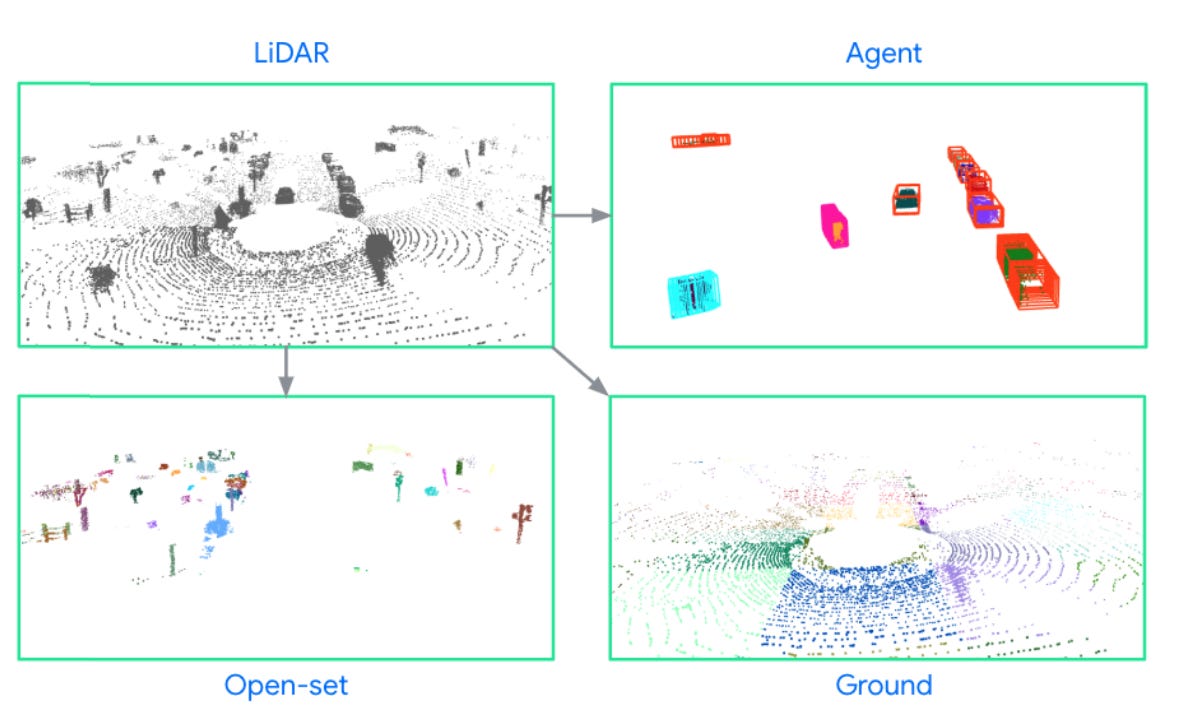

First the perception system divides the world into three categories: agents (which includes cars and pedestrians), open-set objects (like traffic cones and mailboxes), and road surfaces (which are divided into patches ten meters square). It does this using a pre-trained image model that already knows what cars, pedestrians, and traffic cones look like.

Once it has a list of scene elements, the perception system uses a second neural network to assign each element an “object vector.” To a human, these vectors are just an inscrutable list of numbers. But those numbers are optimized to capture the information that will be most helpful to the prediction network that will be using the information.

Some dimensions of the vector will likely indicate whether it’s a fire truck, a stop sign, a tree trunk, or something else. Other dimensions will represent subtler attributes of objects—the kind of information that might have been discarded by a conventional perception system. If the object is a pedestrian, for example, the vector might encode information about the position of the pedestrian’s head, arms, and legs.

Waymo trained the perception and prediction networks together in a process called end-to-end training. If the prediction network got the wrong answer on a training example, the training algorithm would not only adjust the weights of the prediction network, it would also send signals backwards to the perception network so its weights could be adjusted too. Over millions of training rounds, the perception network got better and better at extracting precisely the information the prediction network needed to correctly anticipate how vehicles and pedestrians move in real driving scenarios.

An industry-wide trend

Let’s take a step back and look at what Waymo did here. Previously Waymo had two separate systems—a perception system and a prediction system—that were designed by human scientists and operated independently. The scientists also decided what information should pass between these systems. This meant a lot of valuable information got discarded at the interface between the two modules.

In its recent work, Waymo has done two things:

Replace each human-designed system with a neural network that learned directly from training data.

Fuse the two networks together in an end-to-end training paradigm.

This second step means that instead of passing information between these networks in a format designed by a human scientist, the networks now communicate using an “object vector” format that was optimized during the training process to convey as much useful information as possible to the prediction network.

Across the self-driving industry, companies are making similar changes. They are taking parts of their autonomy stacks that were lovingly hand-crafted by human programmers and replacing them with neural networks that learn from large training sets. They’re also looking for ways to link networks together so they can be trained in an end-to-end fashion.

Elon Musk, for example, has described version 12 of Tesla’s Full Self Driving software as being based on “end-to-end neural nets.”

The startup Waabi describes its self-driving software as “an end-to-end trainable system that automatically learns from data.” The company recently published a research paper describing a single transformer-based network that could both detect objects and predict their future trajectories. It does a similar job to the Waymo networks I described earlier.

Why LLMs aren’t ready for driver’s licenses

If you pushed this trend to its logical conclusion, you’d get a single transformer-based network that performs the entire driving task. Such a network would take in sensor data on one side and produce driving commands on the other.

That’s the vision of Wayve, the British startup that raised $1 billion in May.

In a July presentation, Wayve principal scientist Oleg Sinavski surveyed a number of research efforts to enable LLMs to drive vehicles. One of these is LINGO-2, the “vision-language-action model” Wayve released in April.

Wayve hasn’t published a lot of details about how LINGO-2 works, but it seems to have a similar architecture to Google’s RT-2 robotics model. It takes images and words as inputs and produces tokens that tell a car when to turn the steering wheel and when to push the pedals.

And because it’s essentially an LLM, a passenger can chat with it, asking it to explain its decisions or even giving it instructions. In one demo, the user tells LINGO-2 “turning right, clear road” and the model turns right at the next intersection.

It makes for an impressive demo, but are we really about to have LLMs driving our cars?

Toward the end of the talk, Sinavski is admirably candid about the preliminary state of research in this area.

“There are a lot of unsolved problems,” he said. “This is all work in progress.”

One big problem Sinavski noted is that Wayve hasn’t found a vision-language model that’s “really good at spatial reasoning.” If you’re a long-time reader of Understanding AI, you might remember when I asked leading LLMs to tell the time from an analog clock or solve a maze. ChatGPT, Claude, and Gemini all failed because today’s foundation models are not good at thinking geometrically.

This seems like it would be a big downside for a model that’s supposed to drive a car. And I suspect it’s why Waymo’s perception system isn’t just one big network. Waymo still uses traditional computer code to divide the driving scene up into discrete objects and compute a numerical bounding box for each one. This kind of pre-processing gives the prediction network a head start as it reasons about what will happen next.

Another concern is that the opaque internals of LLMs make them difficult to debug. If a self-driving system makes a mistake, engineers want to be able to look under the hood and figure out what happened. That’s much easier to do in a system like Waymo’s, where some of the basic data structures (like the list of scene elements and their bounding boxes) were designed by human engineers.

But the broader point here is that self-driving companies do not face a binary choice between hand-crafted code or one big end-to-end network. The optimal self-driving architecture is likely to be a mix of different approaches. Companies will need to learn the best division of labor from trial and error.

So interesting and cool. Through your writing, I've come to be much more favorably disposed towards AI in general and FSD more specifically. But there's so much complexity in all of this. For FSD, a few issues/ideas emerge for me:

1. communication between agents. In the traffic example - a human driver turning left will make a judgement based on speed and direction of the oncoming car, but also if there's communication with the other driver. Can you see their face and are they paying attention? Did they flash their lights at you to signal that you can turn? In a different context - I tell my kids to make eye contact with a driver before crossing in front of them - even if they're stopped, I want them to acknowledge my kid (the pedestrian). If you don't make eye contact, don't cross.

2. Somewhat relatedly, I have come to be interested in the idea that traffic is a culture - or at least has cultural dimensions. I wrote a little blog about it: what it was like trying to cross a street in thailand with no traffic signals or crosswalks (https://niawag.substack.com/p/traffic-is-a-culture). When I first came to Washington DC, I noticed a traffic pattern that was totally unfamiliar to me: a left-turning driver was given right-of-way when a light changed. Not a whole column of drivers, but one car could turn left if they were signalling before the oncoming driver would go. It was a kind of courtesy, but I was totally unfamiliar with it. It is/was dangerous to participate in traffic and not know the rules - written and unwritten. I've been thinking about it because I want our traffic culture to be safer and to incorporate other vehicles (bikes, scooters, etc) better. But it's a transition and changing culture is hard. And yet another challenge for FSD.

"If a self-driving system makes a mistake, engineers want to be able to look under the hood and figure out what happened."

I see what you did there.