17 predictions for AI in 2026

AI will continue improving rapidly, but real-world economic impacts will be modest.

2025 has been a huge year for AI: a flurry of new models, broad adoption of coding agents, and exploding corporate investment were all major themes. It’s also been a big year for self-driving cars. Waymo tripled weekly rides, began driverless operations in several new cities, and started offering freeway service. Tesla launched robotaxi services in Austin and San Francisco.

What will 2026 bring? We asked eight friends of Understanding AI to contribute predictions, and threw another nine in ourselves. We give a confidence score for each prediction; a prediction with 90% confidence should be right nine times out of ten.
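To make the confidence scores concrete, here is a small sketch (ours, not part of the forecasting method) that adds up the seventeen stated probabilities. If every score is well calibrated, the sum is the number of predictions we'd expect to come true. It also defines a Brier score, one common way to grade probabilistic forecasts once outcomes are known. The outcomes used in the test are hypothetical.

```python
# Confidence scores for the 17 predictions, in order, as probabilities.
CONFIDENCES = [0.75, 0.80, 0.80, 0.90, 0.55, 0.70, 0.90, 0.90, 0.55,
               0.75, 0.70, 0.75, 0.70, 0.85, 0.60, 0.70, 0.65]


def expected_hits(confidences: list[float]) -> float:
    """Expected number of correct predictions if every score is calibrated.

    By linearity of expectation this is just the sum of the probabilities,
    even when some predictions are not independent of each other.
    """
    return sum(confidences)


def brier_score(confidences: list[float], outcomes: list[bool]) -> float:
    """Mean squared gap between stated probability and outcome (0 is perfect)."""
    if len(confidences) != len(outcomes):
        raise ValueError("need exactly one outcome per prediction")
    total = sum((p - float(hit)) ** 2 for p, hit in zip(confidences, outcomes))
    return total / len(confidences)


print(round(expected_hits(CONFIDENCES), 2))  # 12.55
```

So a well-calibrated set of forecasters would expect roughly 12 or 13 of the 17 to land. Predictions 16 and 17 point in opposite directions, so at most one of them can come true.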

We don’t believe AI is a bubble on the verge of popping, but neither do we think we’re close to a “fast takeoff” driven by the invention of artificial general intelligence. Rather, we expect models to continue improving their capabilities — but we think it will take a while for the full impact to be felt across the economy.

1. Big Tech capital expenditures will exceed $500 billion (75%)

Timothy B. Lee

In 2024, the five main hyperscalers — Google, Microsoft, Amazon, Meta, and Oracle — had $241 billion in capital expenditures. This year, those same companies are on track to spend more than $400 billion.

This rapidly escalating spending is a big reason many people believe that there’s a bubble in the AI industry. As we’ve reported, tech companies are now investing a larger share of the economy than the US spent in the peak year of the Apollo program or the Interstate Highway System. Many people believe that this level of spending is simply unsustainable.

But I don’t buy it. Industry leaders like Mark Zuckerberg and Satya Nadella have said they aren’t building these data centers to prepare for speculative future demand — they’re just racing to keep up with orders their customers are placing right now. Corporate America is excited about AI and spending unprecedented sums on new AI services.

I don’t expect Big Tech’s capital spending to grow as much in 2026 as it did in 2025, but I do expect it to grow, ultimately exceeding $500 billion for the year.
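The arithmetic behind this prediction is easy to sketch. Using the round figures above ($241 billion in 2024 and roughly $400 billion on track for 2025), the snippet below computes 2025’s growth rate and the growth 2026 would need to clear $500 billion. The 2025 number is an estimate, so treat the output as approximate.

```python
def growth_pct(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after / before - 1) * 100


capex_2024 = 241      # $ billions, five hyperscalers combined
capex_2025 = 400      # $ billions, approximate "on track" figure
threshold_2026 = 500  # $ billions, the bar this prediction sets

print(f"2024 -> 2025 growth: {growth_pct(capex_2024, capex_2025):.0f}%")
print(f"Growth needed in 2026: {growth_pct(capex_2025, threshold_2026):.0f}%")
```

Growth would only need to slow from about 66% to about 25% for the prediction to come true, which fits the expectation that growth will slow but continue. Because 2025 spending is "more than" $400 billion, the required 2026 growth rate is, if anything, a little lower.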

2. OpenAI and Anthropic will both hit their 2026 revenue goals (80%)

Timothy B. Lee

Anthropic and OpenAI have both enjoyed impressive revenue growth in 2025.

OpenAI expects to generate more than $13 billion in revenue for calendar year 2025, and to end the year with annual recurring revenue around $20 billion. A leaked internal document indicated OpenAI is aiming for $30 billion in revenue in 2026, more than double the 2025 figure.

Anthropic expects to generate around $4.7 billion in revenue in 2025. In October, the company said its annual recurring revenue had risen to “almost $7 billion.” The company is aiming for 2026 revenue of $15 billion.

I predict that both companies will hit these targets — and perhaps exceed them. The capabilities of AI models have improved a lot over the last year, and I expect there is a ton of room for businesses to automate parts of their operations even without new model capabilities.
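A rough way to size these targets, as a sketch using only the figures above: compare each 2026 goal with 2025 revenue and with the latest annualized run rate. OpenAI’s $13 billion is a floor ("more than"), and Anthropic’s run rate is the October "almost $7 billion" figure, so the multiples are approximate.

```python
# Figures from the text, in $ billions:
# (2025 revenue, latest annualized run rate, 2026 target)
COMPANIES = {
    "OpenAI": (13.0, 20.0, 30.0),    # run rate: expected end-of-2025 ARR
    "Anthropic": (4.7, 7.0, 15.0),   # run rate: "almost $7 billion" as of October
}


def target_multiples(rev_2025: float, run_rate: float, target: float) -> tuple[float, float]:
    """How many times larger the 2026 target is than 2025 revenue and the run rate."""
    return target / rev_2025, target / run_rate


for name, figures in COMPANIES.items():
    vs_revenue, vs_run_rate = target_multiples(*figures)
    print(f"{name}: {vs_revenue:.1f}x 2025 revenue, {vs_run_rate:.1f}x run rate")
```

If revenue merely held at its current annualized rate for all of 2026, OpenAI would still fall short: its target is about 1.5 times that rate, and Anthropic’s is a bit more than 2 times. Both goals therefore assume growth continues through the year.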

3. The context windows of frontier models will stay around one million tokens (80%)

Kai Williams

LLMs have a “context window,” the maximum number of tokens they can process. A larger context window lets an LLM tackle more complex tasks, but it is more expensive to run.

When ChatGPT came out in November 2022, it could only process 8,192 tokens at once. Over the following year and a half, context windows from the major providers increased dramatically. OpenAI started offering a 128,000 token window with GPT-4 Turbo in November 2023. The same month, Anthropic released Claude 2.1, which offered 200,000 token windows. And Google started offering one million tokens of context with Gemini 1.5 Pro in February 2024 — which it later expanded to two million tokens.

Since then, progress has slowed. Anthropic has not changed its default context size since Claude 2.1.[1] GPT-5.2 has a 400,000-token context window, smaller than the one-million-token window of GPT-4.1, which OpenAI released last April. And Google’s largest context window has shrunk to one million tokens.

I expect context windows to stay fairly constant in 2026. As Tim explained in November, larger context window sizes brush up against limitations in the transformer architecture. For most tasks with current capabilities, smaller context windows are cheaper and just as effective. In 2026, there might be some coding-related LLMs — where it’s useful for the LLM to be able to read an entire codebase — that have larger context windows. But I predict the context lengths of general-purpose frontier models will stay about the same over the next year.
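One simplified way to see why long contexts get expensive: in standard dense self-attention, every token is scored against every other token, so the attention score matrix grows with the square of the context length. The sketch below applies that scaling to the window sizes mentioned above. It deliberately ignores everything else (feed-forward layers, the KV cache, and the optimizations real providers use), so it illustrates the scaling, not any company’s actual costs.

```python
# Context window sizes mentioned in the text, in tokens.
WINDOWS = {
    "ChatGPT (Nov 2022)": 8_192,
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
    "GPT-5.2": 400_000,
    "Gemini 1.5 Pro": 1_000_000,
    "Gemini 1.5 Pro, expanded": 2_000_000,
}


def relative_attention_cost(tokens: int, baseline: int = 8_192) -> float:
    """Size of the dense n-by-n attention score matrix relative to a baseline.

    Simplification: looks only at the term that grows as n**2.
    """
    return (tokens / baseline) ** 2


for name, n in WINDOWS.items():
    ratio = relative_attention_cost(n)
    print(f"{name}: {n:,} tokens -> {ratio:,.0f}x the attention scores of 8,192")
```

Under this simplification, doubling a window from one million to two million tokens quadruples the attention work, one intuition for why providers haven’t kept pushing window sizes up.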

4. Real GDP will grow by less than 3.5% in the US (90%)

Timothy B. Lee

The year 2027 has acquired a totemic status in some corners of the AI world. In 2024, former OpenAI researcher Leopold Aschenbrenner penned a widely read series of essays predicting a “fast takeoff” in 2027. Then in April 2025, an all-star team of researchers published AI 2027, a detailed forecast for rapid AI progress. They forecast that by the 2027 holiday season, GDP would be “ballooning.” One AI 2027 author suggested that this could eventually lead to annual GDP growth rates as high as 50%.

They don’t make a specific prediction about 2026, but if their forecasts are close to right, we should start seeing signs of that acceleration by the end of 2026. If we’re on the cusp of an AI-powered takeoff, that should translate to above-average GDP growth, right?

So here’s my prediction: inflation-adjusted GDP in the third quarter of 2026 will not be more than 3.5% higher than the third quarter of 2025.[2] Over the last decade, year-over-year GDP growth has only been faster than 3.5% in late 2021 and early 2022, a period when the economy was bouncing back from Covid. Outside of that period, year-over-year growth of real GDP has ranged from 1.4% to 3.4%.

I expect the AI industry to continue growing at a healthy pace, and this should provide a modest boost to the US economy. Indeed, data center construction has been supporting the economy over the last year. But I expect the boost from data center construction to be a fraction of a percentage point of GDP growth, not enough to push overall economic growth outside its normal range.
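The test described here is a plain year-over-year comparison of real GDP levels for the same quarter. A minimal sketch, assuming you have inflation-adjusted GDP levels for Q3 2025 and Q3 2026 (for example, from the Bureau of Economic Analysis’s real GDP series); the numbers in the usage line are placeholders, not actual data.

```python
THRESHOLD_PCT = 3.5  # the prediction's ceiling on year-over-year real growth


def yoy_growth_pct(real_gdp_prev: float, real_gdp_now: float) -> float:
    """Year-over-year growth (%) between the same quarter in consecutive years."""
    return (real_gdp_now / real_gdp_prev - 1) * 100


def prediction_holds(real_gdp_q3_2025: float, real_gdp_q3_2026: float) -> bool:
    """True if Q3 2026 real GDP is not more than 3.5% above Q3 2025."""
    return yoy_growth_pct(real_gdp_q3_2025, real_gdp_q3_2026) <= THRESHOLD_PCT


# Hypothetical levels in trillions of inflation-adjusted dollars (placeholders).
print(prediction_holds(24.0, 24.6))  # 2.5% growth -> True
```

Using the same quarter in both years sidesteps seasonal patterns, and comparing inflation-adjusted levels keeps price increases from being counted as growth.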

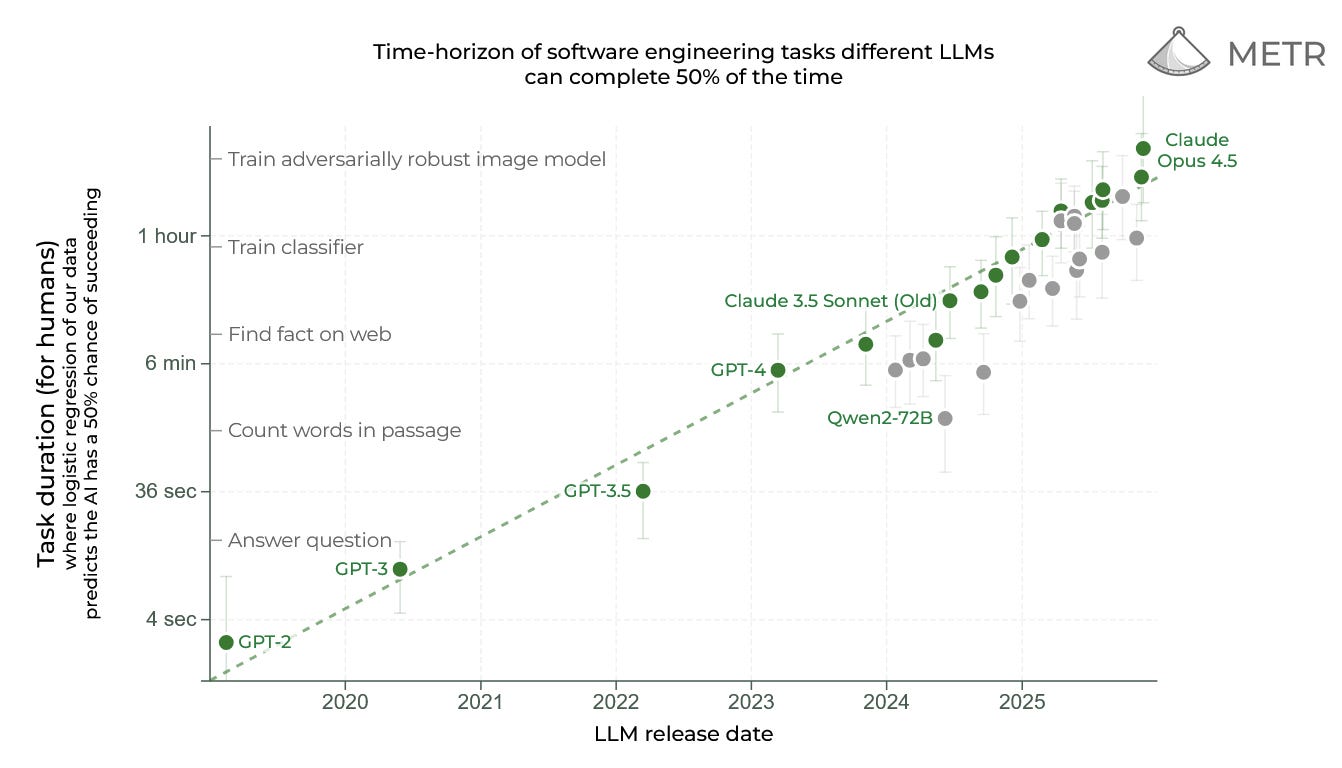

5. AI models will be able to complete 20-hour software engineering tasks (55%)

Kai Williams

The AI evaluation organization METR released the original version of this chart in March. They found that every seven months, the length of software engineering tasks that leading AI models were capable of completing (with a 50% success rate) was doubling. Note that the y-axis of this chart is on a log scale, so the straight line represents an exponential increase.

By mid-2025, LLM releases seemed to be improving more quickly, doubling successful task lengths in just five months. METR estimates that Claude Opus 4.5, released in November, could complete software tasks (with at least a 50% success rate) that took humans nearly five hours.

I predict that this faster trend will continue in 2026. AI companies will have access to significantly more computational resources in 2026 as the first gigawatt-scale clusters start operating early in the year, and LLM coding agents are starting to speed up AI development. Still, there are reasons to be skeptical. Both pre-training (with imitation learning) and post-training (with reinforcement learning) have shown diminishing returns.

Either way, whether METR’s trend line continues to hold is a crucial question. If the faster trend holds, by the end of 2026 the strongest AI models will be able to complete 20-hour software tasks, half of a software engineer’s work week, with 50% reliability.
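The arithmetic behind the 20-hour figure, as a rough sketch: start from METR’s estimate of about five hours for Claude Opus 4.5 in November 2025 and extrapolate under each doubling time mentioned above. Both inputs come from the text; the assumption being tested is that growth stays cleanly exponential.

```python
import math


def horizon_hours(start_hours: float, months_elapsed: float, doubling_months: float) -> float:
    """Task length (hours) at 50% success after exponential growth."""
    return start_hours * 2 ** (months_elapsed / doubling_months)


def months_to_reach(target_hours: float, start_hours: float, doubling_months: float) -> float:
    """Months until the horizon reaches `target_hours` under the same trend."""
    return doubling_months * math.log2(target_hours / start_hours)


START = 5.0  # hours; "nearly five" for Claude Opus 4.5 (November 2025), rounded up

print(f"{months_to_reach(20, START, 5):.0f} months to 20 hours at a 5-month doubling time")
print(f"{months_to_reach(20, START, 7):.0f} months to 20 hours at a 7-month doubling time")
print(f"After 12 months: {horizon_hours(START, 12, 5):.1f} h (5-month) "
      f"vs {horizon_hours(START, 12, 7):.1f} h (7-month)")
```

Going from five to 20 hours takes two doublings: about ten months on the faster trend (around September 2026) but about fourteen on the original seven-month trend (early 2027). So the prediction is roughly a bet that the faster trend continues.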

6. The legal free-for-all that characterized the first few years of the AI boom will be definitively over (70%)

James Grimmelmann, professor at Cornell Tech and Cornell Law School

So far, AI companies are winning against the lawsuits that pose truly existential threats — most notably, courts in the US, EU, and UK have all held that it’s not copyright infringement to train a model. But for everything else, the courts have been putting real operational limits on them. Anthropic is paying $1.5 billion to settle claims that it trained on downloads from shadow libraries, and multiple courts have held or suggested that they need real guardrails against infringing outputs.

I expect the same thing to happen beyond copyright, too: courts won’t enjoin AI companies out of existence, but they will impose serious high-dollar consequences if the companies don’t take reasonable steps to prevent easily predictable harms. It may still take a head on a pike — my money is on Perplexity’s — but I expect AI companies to get the message in 2026.

7. AI will not cause any catastrophes in 2026 (90%)

Steve Newman, author of Second Thoughts

There are credible concerns that AI could eventually enable various disaster scenarios. For instance, an advanced AI might help create a chemical or biological weapon, or carry out a devastating cyberattack. This isn’t entirely hypothetical; Anthropic recently uncovered a group using its agentic coding tools to carry out cyberattacks with minimal human supervision. And AIs are starting to exhibit advanced capabilities in these domains.

However, I do not believe there will be any major “AI catastrophe” in 2026. More precisely: there will be no unusual physical or economic catastrophe (dramatically larger than past incidents of a similar nature) in which AI plays a crucial enabling role. For instance, no unusually impactful bio, cyber, or chemical attack.

Why? It always takes longer than expected for technology to find practical applications — even bad applications. And AI model providers are taking steps to make it harder to misuse their models.

Of course, people may jump to blame AI for things that might have happened anyway, just as some tech CEOs blamed AI for layoffs that were triggered by over-hiring during Covid.

8. Major AI companies like OpenAI and Anthropic will stop investing in MCP (90%)

Andrew Lee, CEO of Tasklet (and Tim’s brother)

The Model Context Protocol was designed to give AI assistants a standardized way to interact with external tools and data sources. Since its introduction in late 2024, it has exploded in popularity.

But here’s the thing: modern LLMs are already smart enough to reason about how to use conventional APIs directly, given just a description of that API. And those descriptions that MCP servers provide? They’re already baked into the training data or accessible on public websites.

Agents built to access APIs directly can be simpler and more flexible, and they can connect to any service — not just the ones that support MCP.
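To make the contrast concrete, here is a hedged sketch of the two request shapes for a hypothetical weather lookup. MCP messages use JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying the tool’s `name` and `arguments`. The direct version is just an ordinary HTTP URL that an agent could build from API documentation. The tool name `get_forecast`, the endpoint URL, and the `city` parameter are invented for illustration, and the code only builds the payloads; it doesn’t send them.

```python
import json
from urllib.parse import urlencode


def mcp_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """JSON-RPC 2.0 message an MCP client sends to invoke a server-side tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


def direct_api_url(base_url: str, params: dict) -> str:
    """Plain REST request URL an agent could construct straight from API docs."""
    return f"{base_url}?{urlencode(params)}"


# Hypothetical tool and endpoint, for illustration only.
print(mcp_tool_call(1, "get_forecast", {"city": "Austin"}))
print(direct_api_url("https://api.example.com/v1/forecast", {"city": "Austin"}))
```

The first form requires someone to run an MCP server that wraps the weather service. The second needs only the service’s own API, which is the simplification this prediction has in mind.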

By the end of 2026, I predict MCP will be seen as an unnecessary abstraction that adds complexity without meaningful benefit. Major vendors will stop investing in it.

9. A Chinese company will surpass Waymo in total global robotaxi fleet size (55%)

Daniel Abreu Marques, author of The AV Market Strategist

Waymo has world-class autonomy, broad regulatory acceptance, and a maturing multi-city playbook. But vehicle availability remains a major bottleneck. Waymo is scheduled to begin using vehicles from the Chinese automaker Zeekr in the coming months, but tariff barriers and geopolitical pressures will limit the size of its Zeekr-based fleet. Waymo has also signed a deal with Hyundai, but volume production likely won’t begin until after 2026. So for the next year, fleet growth will remain incremental.

Chinese AV players operate under a different set of constraints. Companies like Pony.ai, Baidu Apollo Go, and WeRide have already demonstrated mass-production capability. For example, when Pony rolled out its Gen-7 platform, it reduced its bill of materials cost by 70%. Chinese companies are scaling fleets across China, the Middle East, and Europe simultaneously.

At the moment, Waymo has about 2,500 vehicles in its commercial fleet. The biggest Chinese company is probably Pony.ai, with around 1,000 vehicles. Pony.ai is aiming for 3,000 vehicles by the end of 2026, while Waymo will need 4,000 to 6,000 vehicles to meet its year-end goal of one million weekly rides.
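These fleet numbers imply a utilization rate. A quick sketch using only the figures above (one million weekly rides served by 4,000 to 6,000 vehicles):

```python
WEEKLY_RIDE_GOAL = 1_000_000


def rides_per_vehicle(weekly_rides: int, fleet_size: int) -> tuple[float, float]:
    """Rides each vehicle must give per week and per day to hit the goal."""
    per_week = weekly_rides / fleet_size
    return per_week, per_week / 7


for fleet in (4_000, 6_000):
    week, day = rides_per_vehicle(WEEKLY_RIDE_GOAL, fleet)
    print(f"{fleet:,} vehicles: {week:.0f} rides/week, about {day:.0f} rides/day each")
```

That works out to roughly 24 to 36 rides per vehicle per day. Pony.ai’s 3,000-vehicle target sits below Waymo’s 4,000-to-6,000 range, so this scenario depends on Waymo falling short of the fleet it needs, not just on Chinese growth.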

But if Waymo’s supply chain ramps slower than expected due to unforeseen problems or delays — and Chinese players continue to ramp up production volume — then at least one of them could surpass Waymo in total global robotaxi fleet size by the end of 2026.

10. The first fully autonomous vehicle will be sold to consumers — but it won’t be from Tesla (75%)

Sophia Tung, content editor of the Ride AI newsletter

Currently, many customer-owned vehicles have advanced driver-assistance systems (known as “level two” in industry jargon), but none are capable of fully driverless operations (“level four”). I predict that will change in 2026: you’ll be able to buy a car that’s capable of operating with no one behind the wheel, at least in some limited areas.

One company that might offer such a vehicle is Tensor, formerly AutoX. Tensor is working with younger, more eager automakers that already ship vehicles in the US, like VinFast, to manufacture and integrate their vehicles. The manufacturing hurdles, while significant, are not insurmountable.

Many people expect Tesla to ship the first fully driverless customer-owned vehicle, but I think that’s unlikely. Tesla is in a fairly comfortable position. Its driver-assistance system performs well enough most of the time. Users believe it is “pretty much” a fully driverless system. Being years behind Waymo in the robotaxi market hasn’t hurt Tesla’s credibility with its fans. So Tesla can probably retain the loyalty of its customers even if a little-known startup like Tensor introduces a customer-owned driverless vehicle before Tesla enables driverless operation for its customers.

Tensor has a vested interest in being first and flashiest in the market. It could launch a vehicle that can operate with no driver within a very limited area and credibly claim a first-to-market win. Tensor runs driverless robotaxi testing programs and therefore understands the risks involved. Tesla, in contrast, probably does not want to assume liability or responsibility for accidents caused by its system. So I expect Tesla to wait, observe how Tensor performs, and then adjust its own strategy accordingly.

11. Tesla will begin offering a truly driverless taxi service to the general public in at least one city (70%)

Timothy B. Lee

In June, Tesla delivered on Elon Musk’s promise to launch a driverless taxi service in Austin. But it did so in a sneaky way. There was no one in the driver’s seat, but every Robotaxi had a safety monitor in the passenger seat. When Tesla began offering Robotaxi rides in the San Francisco Bay Area, those vehicles had safety drivers.

It was the latest example of Elon Musk overpromising and underdelivering on self-driving technology. This has led many Tesla skeptics to dismiss Tesla’s self-driving program entirely, arguing that Tesla’s current approach simply isn’t capable of full autonomy.

I don’t buy it. Elon Musk tends to achieve ambitious technical goals eventually. And Tesla has been making genuine progress on its self-driving technology. Indeed, in mid-December, videos started to circulate showing Teslas on public roads with no one inside. I think that suggests that Tesla is nearly ready to debut genuinely driverless vehicles, with no Tesla employees anywhere in the vehicle.

Before Tesla fans get too excited, it’s worth noting that Waymo began its first fully driverless service in 2020. Despite that, Waymo didn’t expand commercial service to a second city — San Francisco — until 2023. Waymo’s earliest driverless vehicles were extremely cautious and relied heavily on remote assistance, making rapid expansion impractical. I expect the same will be true for Tesla — the first truly driverless Robotaxis will arrive in 2026, but technical and logistical challenges will limit how rapidly they expand.

12. Text diffusion models will hit the mainstream (75%)

Kai Williams

Most current LLMs are autoregressive, which means they generate tokens one at a time. But this isn’t the only way that AI models can produce outputs. Another type of generation is diffusion. The basic idea is to train the model to progressively remove noise from an input. When paired with a prompt, a diffusion model can turn random noise into coherent outputs.

For a while, diffusion was the standard approach for image models, but it wasn’t as clear how to adapt it to text. In 2025, this changed. In February, the startup Inception Labs released Mercury, a text diffusion model aimed at coding. In May, Google announced Gemini Diffusion as a beta release.

Diffusion models have several key advantages over autoregressive models. For one, they’re much faster because they generate many tokens at once. They also might learn from data more efficiently, at least according to a July study by Carnegie Mellon researchers.
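A toy illustration of where the speed comes from, loosely modeled on masked text diffusion. This is one of several approaches to text diffusion, and we don’t know the internals of Mercury or Gemini Diffusion. Instead of a neural network, a hypothetical `predict` function simply returns a fixed target sentence, so the sketch shows only the number of model calls, not output quality. The autoregressive decoder commits one token per call. The diffusion-style decoder starts from an all-masked sequence and commits several positions per call.

```python
MASK = "<mask>"
TARGET = "the quick brown fox jumps over the lazy dog".split()


def predict(tokens: list[str]) -> list[str]:
    """Hypothetical stand-in for a trained model: a guess for every position."""
    return list(TARGET)


def autoregressive_decode(length: int) -> tuple[list[str], int]:
    """Fill one position per model call, left to right."""
    tokens: list[str] = []
    steps = 0
    while len(tokens) < length:
        guesses = predict(tokens)
        tokens.append(guesses[len(tokens)])
        steps += 1
    return tokens, steps


def diffusion_decode(length: int, tokens_per_step: int) -> tuple[list[str], int]:
    """Start fully masked ("noise") and unmask several positions per model call."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    tokens = [MASK] * length
    steps = 0
    while MASK in tokens:
        guesses = predict(tokens)  # one call proposes every position at once
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Real samplers usually unmask the positions the model is most
        # confident about; this toy simply goes left to right.
        for i in masked[:tokens_per_step]:
            tokens[i] = guesses[i]
        steps += 1
    return tokens, steps


_, ar_steps = autoregressive_decode(len(TARGET))
_, df_steps = diffusion_decode(len(TARGET), tokens_per_step=3)
print(ar_steps, df_steps)  # 9 3
```

Each step is one pass through the model, so fewer steps means lower latency. A real diffusion step processes the whole sequence, so per-step cost is not identical to an autoregressive step.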

While I don’t expect diffusion models to supplant autoregressive models, I think there will be more interest in this space, with at least one established lab (Chinese or American) releasing a diffusion-based LLM for mainstream use.

13. There will be an anti-AI super PAC that raises at least $20 million (70%)

Charlie Guo, author of Artificial Ignorance

AI has become a vessel for a number of different anxieties: misinformation, surveillance, psychosis, water usage, and “Big Tech” power in general. As a result, opposition to AI is quickly becoming a bipartisan issue. One example: back in June, Ted Cruz attempted to add an AI regulation moratorium to the budget reconciliation bill (not unlike President Trump’s recent executive order), but the Senate voted 99-1 to strip it out.

Interestingly, there are at least two well-funded pro-AI super PACs:

- Leading The Future, with over $100 million from prominent Silicon Valley investors, and
- Meta California, with tens of millions from Facebook’s parent company.

Meanwhile, there’s no equally organized counterweight on the anti-AI side. This feels like an unstable equilibrium, and I expect to see a group solely dedicated to lobbying against AI-friendly policies by the end of 2026.

14. News coverage linking AI to suicide will triple — but actual suicides will not (85%)

Abi Olvera, author of Positive Sum

We’ve already seen extensive media coverage of cases like the Character.AI lawsuit, where a teen’s death became national news. I expect suicides involving LLMs to generate even more media attention in 2026. Specifically, I predict that news mentions of “AI” and “suicide” in media databases will be at least three times higher in 2026 than in 2025.

But increased coverage doesn’t mean increased deaths. The US suicide rate will likely continue along its baseline trend.

The US suicide rate is currently near a historic peak after a mostly steady rise since 2000. The rate remained high through 2023, but recent data shows a meaningful decrease in 2024. I expect suicide rates to stay stable or decline, reverting toward the long-run average and away from the 2018 and 2022 peaks.

15. The American open frontier will catch up to Chinese models (60%)

Florian Brand, editor at the Interconnects newsletter

In late 2024, Qwen 2.5, made by the Chinese firm Alibaba, surpassed the best American open model, Llama 3. In 2025, we got a lot of insanely good Chinese models (DeepSeek R1, Qwen3, Kimi K2), and American open models fell behind. Meta’s Llama 4, Google’s Gemma 3, and other releases were good models for their size, but didn’t reach the frontier. American investment in open weights started to flag; there have been rumors since the summer that Meta is switching to closed models.

But things could change next year. Through advocacy like the ATOM Project (led by Nathan Lambert, the founder of Interconnects), more Western companies have indicated interest in building open-weight models. Late 2025 brought an uptick in solid American and Western open model releases like Mistral 3, Olmo 3, Rnj, and Trinity. Right now, those models are behind in raw performance, but I predict that this will change in 2026 as Western labs keep up their current momentum. American companies still have substantial resources, and organizations like Nvidia, which announced in December that it would release a 500-billion-parameter model, seem ready to invest.

16. Vibes will have more active users than Sora in a year (70%)

Kai Williams

This fall, OpenAI and Meta both released platforms for short-form AI-generated video. Initially, Sora caught all of the positive attention: the app came with a new video generation model and a clever mechanic around making deepfakes of your friends. Meta’s Vibes initially fell flat. Sora quickly became the number one app in Apple’s App Store, while the Meta AI app, which includes Vibes, languished around position 75.

Today, however, the momentum seems to have shifted. The initial excitement around Sora appears to have worn off as the novelty of AI videos faded. Meanwhile, Vibes has been growing, albeit slowly, hitting two million daily active users in mid-November, according to Business Insider. The Meta AI app now ranks higher on the App Store than Sora.

I think this reversal will continue. From personal experience, Sora’s recommendation algorithm seems very clunky, and Meta is very skilled at building compelling products that grow its user base. I wouldn’t count out Mark Zuckerberg when it comes to growing a social media app.

17. Counterpoint: Sora will have more active users than Vibes in a year (65%)

Timothy B. Lee

This is one of the few places where Kai and I disagreed, so I thought it would be fun to air both sides of the argument.

I was initially impressed by Sora’s clever product design, but the app hasn’t held my attention since my October writeup. However, toward the end of that writeup I said this:

> I expect the jokes to get funnier as the Sora audience grows. Another obvious direction is licensing content from Hollywood. I expect many users would love to put themselves into scenes involving Harry Potter, Star Wars, or other famous fictional worlds. Right now, Sora tersely declines such requests due to copyright concerns. But that could change if OpenAI writes big enough checks to the owners of these franchises.

This is exactly what happened. OpenAI just signed a licensing agreement with Disney to let users make videos of themselves with Disney-owned characters. It’s exclusive for the first year. I expect this to greatly increase interest in Sora, because while making fake videos of yourself is lame, making videos of yourself interacting with Luke Skywalker or Iron Man is going to be more appealing.

I doubt users will react well if they’re just given a blank prompt field to fill out, so fully exploiting this opportunity will require clever product design. But Sam Altman has shown a lot of skill at turning promising AI models into compelling products. There’s no guarantee he’ll be able to do this with Sora, but I’m guessing he’ll figure it out.

[1] Anthropic does offer a one-million-token context window, in beta, for Sonnet 4 and Sonnet 4.5.

[2] I’m focusing on Q3 numbers because we don’t typically get GDP data for the fourth quarter until late January, which is too late for a year-end article like this.
