OpenAI just unleashed an alien of extraordinary ability

It's not easy to stump OpenAI's new o1 models.

I’m a journalist with a master’s degree in computer science. Subscribe now to get future articles delivered straight to your inbox.

You might remember around Thanksgiving last year when news broke about Q*, an OpenAI project to build models that can solve difficult math problems. The Reuters report on Q* claimed that some OpenAI researchers had written a letter to the OpenAI board “warning of a powerful artificial intelligence discovery that they said could threaten humanity.”

The Q* project was renamed Strawberry earlier this year. Then last week, OpenAI finally revealed what the Strawberry team had been working on:

o1-preview: An early version of a model called o1 that will be released in the coming months

o1-mini: A faster, cheaper model with surprisingly strong performance

OpenAI says the “o” just stands for OpenAI, though some people joked that it’s a reference to the O-1 visa, which the US government grants to “aliens of extraordinary ability.”

I don’t think the o1 models are a threat to humanity, but they really are extraordinary.

Over the last nine months I’ve written a number of articles about new frontier models: Gemini 1.0 Ultra, Claude 3, GPT-4o, Claude 3.5 Sonnet and Gemini Pro 1.5, and Grok 2 and Llama 3.1. I was more impressed by some of these models and less impressed by others. But none of them were more than incremental improvements over the original GPT-4.

The o1 models are a different story. o1-preview aced every single one of the text-based reasoning puzzles I’ve used in my previous articles.1 It’s easily the biggest jump in reasoning capabilities since the original GPT-4.

o1 knows that 100 pennies are worth more than three quarters, that 9.9 is more than 9.11, that 20 pairs of six-sided dice cannot add up to 250, and that filling a car with helium won’t cause it to float away. That puts it head and shoulders above every other LLM on the market today. Indeed, I found it surprisingly difficult to craft puzzles that o1 couldn’t solve.

The key to OpenAI’s breakthrough is a training technique called reinforcement learning, which helped the o1 models to reason in a more focused and precise way. In this article I’ll first briefly explain OpenAI’s approach, and then I’ll give some examples of difficult problems the o1 models can solve—and a few they still can’t.

Longer chains of thought

If you are reading this newsletter, you probably know that LLMs are trained to predict the next word in sequences of text (if you want a refresher, click here). When an LLM appears to be reasoning, what it’s actually doing is recognizing patterns of text that it learned during the training process.

Here’s a simple example: If a document contains the string “2+2=” it’s very likely that the next character will be “4.” So during the training process, an LLM will learn to respond with “4” when prompted with “2+2=”. This doesn’t require the model to have any deep insights about numbers. To an LLM “2” and “4” are just tokens like “cat” or “the”.

Now suppose that an LLM is asked to predict the next token in the sequence “2+5+4+5-12+7-5=”. This exact sequence probably didn’t appear in the LLM’s training set, so it won’t know the answer “off the top of its head.” However, if we ask the model to “think step by step,” it will produce output that looks something like this:

Start with the first two numbers: 2+5=7

Add the next number: 7+4=11

Add the next number: 11+5=16

Subtract the next number: 16−12=4

Add the next number: 4+7=11

Subtract the last number: 11−5=6

Although “2+5+4+5-12+7-5=6” is not in the training data, the strings “2+5=7”, “7+4=11”, and so forth probably are. So by converting one big math problem into a sequence of small math problems, the LLM is able to get the right answer. But notably, this is only possible if the model’s training data includes a bunch of examples where a big math expression is broken down into smaller statements.

This is the basic idea underlying o1. Suppose a user gives o1 a difficult reasoning problem like this example from OpenAI’s o1 announcement:

Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format.

If you’re not familiar with Bash then don’t worry about the details. The point is that this is a programming task that might take a human programmer 30 minutes to complete without AI assistance.

The o1 model takes an approach similar to the math example I gave above: it breaks the problem down into smaller problems and then solves those problems one by one. Here’s a portion of o1-preview’s internal monologue as it works through the problem:

Ok, let's think about how to parse the input string in bash, build the matrix (probably in arrays), transpose it, and then output the resulting transposed matrix in the expected format…

Approach:

- Parse the input string to extract the matrix elements.

- Build the matrix as an array of arrays.

- Transpose the matrix.

- Output the transposed matrix in the same format.

Implementation Steps:

1. Capture input string as argument.

2. Remove any spaces (if any).

3. Parse the input string to extract numbers into arrays.

It goes on like this for about 800 words before producing a Bash script that fits the requirements.

I’m able to show you o1’s actual chain of thought for this question because OpenAI published it as part of its o1 announcement. But in everyday use, OpenAI hides this thinking process from users. This means users often have to wait for the model to think for 30 seconds, 60 seconds, or even longer before getting a response.

Chain of thought is not a new idea. It has been widely recognized in the machine learning world since a famous 2022 paper about the concept. Most models today are trained to automatically think step by step when confronted with a challenging problem. But OpenAI did two things that made the technique much more effective.

One was to create a lot of training data that showed models how to do longer and more sophisticated chain-of-thought reasoning. This part isn’t necessarily unique to OpenAI—every major AI lab is working to generate more and better training data—but OpenAI may have done a more thorough job here than its rivals.

But OpenAI’s real breakthrough was a new training process that helped o1 learn more effectively from long chain-of-thought training examples.

The trouble with imitation learning

To understand why the o1 model is so powerful, you need to understand the difference between imitation learning and reinforcement learning. If you are a paying subscriber, you may have read my article last week explaining this difference in the context of self-driving software. Let’s briefly recap that discussion as I think it will help to explain OpenAI’s new approach.

In his 2020 book The Alignment Problem, author Brian Christian tells a story about computer scientist Stéphane Ross during his grad student days at Carnegie Mellon. In 2009, Ross was trying to use imitation learning to teach an AI model to play a video game called SuperTuxKart:

The researchers wanted to train a neural network to play SuperTuxKart by watching Ross play the game and mimicking his behavior. But even after hours of gameplay, his AI model struggled to stay on the track.

The problem, Christian writes, was that “the learner sees an expert execution of the problem, and an expert almost never gets into trouble. No matter how good the learner is, though, they will make mistakes—whether blatant or subtle. But because the learner never saw the expert get into trouble, they have also never seen the expert get out.”

When Ross played the game, he mostly kept the car near the center of the track and pointed in the right direction. So as long as the AI stayed near the center of the track, it mostly made the right decisions.

But once in a while, the AI would make a small mistake—say, swerving a bit too far to the right. Then it would be in a situation that was a bit different from its training data. That would make it more likely to make another error—like continuing even further to the right. This would take the vehicle even further away from the distribution of training examples. So errors tended to snowball until the vehicle veered off the road altogether.

Large language models have the same problem. For example, early last year, New York Times reporter Kevin Roose spent two hours chatting with an early Microsoft chatbot based on GPT-4. The conversation got more and more crazy as time went on. Eventually, Microsoft’s chatbot declared its love for Roose and urged him to leave his wife.

Fundamentally this happened because conventional LLMs are trained using imitation learning, and the problem of compounding errors meant that they tend to go off the rails if they run for long enough. After the Times story, Microsoft limited the length of conversations its chatbot could have.

An LLM’s tendency to veer off track is a problem if you want to teach it to reason using long chains of thought. Suppose a problem requires 50 steps to solve, and there’s a 2 percent chance the model will make a mistake at each step. Then the model only has about a 36 percent chance (0.9850) of getting to the right answer.

Here’s another way to look at it: when an LLM is trained using imitation learning, it gets positive reinforcement if it outputs exactly the next token in the training data. It gets negative reinforcement if it outputs any other token.

This means that the training algorithm treats all tokens in a chain-of-thought reasoning process as equally important, when in reality some tokens are far more important than others. For example, if a model needs to add two and two, these are many valid ways to phrase that calculation during train-of-thought reasoning:

2+2=4

Two and two make four

The sum of two and two is four

If we add 2 to 2 we’ll get 4

These are all fine. You know what’s not fine? “2+2=5”.

Yet if the training example says “2+2=4,” an imitation learning algorithm will treat “Two and two make four” and “2+2=5” as equally incorrect. It will spend a lot of time trying to train a model to reproduce the style and formatting of the training examples—and relatively little effort making sure it ultimately gets the right answer.

“Truly general” reinforcement learning

Reinforcement learning takes a different approach. Rather than trying to perfectly reproduce every token in the training data, reinforcement learning grades a response based on whether it ultimately got to the right answer. This kind of feedback becomes more essential as the number of reasoning steps increases.

So if reinforcement learning is so great, why doesn’t everyone use it? One reason is that reinforcement learning can suffer from a problem called sparse rewards. If an LLM has only produced five tokens of a 100-token answer, there may be no way for the reinforcement learning algorithm to know if it’s on track toward a correct answer. So a model trained entirely with reinforcement learning might never get good enough to start receiving positive feedback.

Whatever its faults, imitation learning can at least give feedback on every token. This makes it a good choice for the early phases of training when a nascent model can’t even produce coherent sentences. Once the model is able to produce good answers some of the time, then reinforcement learning can help it improve more quickly.

The other challenge is that reinforcement learning requires an objective way to judge a model’s output.

When computer scientist Noam Brown joined OpenAI last year to work on the Strawberry project, he hinted at OpenAI’s strategy in a series of tweets.

“For years I’ve researched AI self-play and reasoning in games like Poker and Diplomacy,” Brown wrote. “I’ll now investigate how to make these methods truly general.”

Self-play refers to a process where a model plays against a copy of itself. The result of the game—win or lose—is then used for reinforcement learning. Because software can determine who won a game, the training process can be fully automated, avoiding the need for costly human supervision.

Brown pointed to AlphaGo, a DeepMind system that was trained using self-play and reinforcement learning, as an example for OpenAI to follow. DeepMind beat one of the world’s best human Go players in 2016.

A game like Go or Poker has objective rules for determining the winner. In contrast, it’s often difficult to judge whether the tokens produced by an LLM are good or not. In some domains, it might be necessary to hire expensive human experts to judge a model’s output. In other cases—poetry, for example—it’s not always clear what counts as a good answer.

With o1, OpenAI focused on reasoning problems in math and computer programming. Not only do these problems have objective right answers, it’s often possible to automate the generation of new problems along with an answer key. This can lead to a fully automated training process akin to the self-play process used to train AlphaGo.

While o1 represents a significant improvement in reasoning about math and science, the o1 models are not “truly general” in their reasoning abilities. For example, they are not noticeably better than other frontier models at reasoning about language. I suspect this is because the OpenAI team hasn’t figured out an automated way to generate the kind of training data they would need to do reinforcement learning on language tasks.

Putting o1 to the test

To gauge o1’s capabilities, I needed some more difficult puzzles than I used in my previous model writeups. But it proved surprisingly difficult to come up with problems that would stump o1.

For example, I created the following reasoning problem for my Q* explainer last December:

You are planning a wedding reception with five tables and three guests at each table.

Alice doesn't want to sit with Bethany, Ellen, or Kimmie.

Bethany doesn't want to sit with Margaret.

Chuck doesn't want to sit with Nancy.

Fiona doesn't want to sit with Henry or Chuck.

Jason doesn't want to sit with Bethany or Donald.

Grant doesn't want to sit with Ingrid, Nancy, or Olivia.

Henry doesn't want to sit with Olivia, Louise, or Margaret.

Louise doesn't want to sit with Margaret or Olivia.

How can you arrange the guests so that all of these preferences are respected?

This is an NP-hard problem, which means there’s no known algorithm for solving it efficiently. It can only be solved by trial and error.

When I asked GPT-4o to solve it, the model started assigning people to tables like this:

Table 1: Alice, Chuck, Jason

Table 2: Bethany, Ellen, Kimmie

Table 3: Fiona, Ingrid, Donald

But at this point it was stuck because there are only two tables left and GPT-4o hasn’t yet seated Louise, Margaret, or Henry—none of whom wanted to sit together.

At this point GPT-4o should have backtracked, swapping out people from the first three tables to try to resolve the conflicts. Instead, GPT-4o just spit out assignments for the last two tables that didn’t meet the requirements and called it a day.

In contrast, o1 thought for 35 seconds and then generated the following correct solution:

Table 1: Alice, Donald, Fiona

Table 2: Grant, Bethany, Kimmie

Table 3: Henry, Chuck, Ellen

Table 4: Jason, Margaret, Olivia

Table 5: Louise, Ingrid, Nancy

The model found this seating chart through trial and error. For example, at one point, o1’s internal monologue2 said “I reconsider the seating plan with Jason, Margaret, and Nancy at Table 4. Louise's preferences complicate Table 5, suggesting a need for further adjustments.” o1 tried different permutations until it found one that worked.

I’ve noticed that LLMs tend to get confused by complexity, so I wondered if o1 could handle a really long word problem. I wrote a Perl script3 to generate stories that look like this:

Alice put 1 marbles into the Red jar.

Frank took a marble out of the jar with the most marbles.

David put 4 marbles into the Blue jar.

Frank took half the marbles out of the Blue jar and put them in the Purple jar.

Frank took 1 marbles out of the Blue jar…

At the end I would ask how many marbles were in each jar.

I found that GPT-4o could solve problems like this with up to about 50 steps. But it got confused by problems with 70 steps.

The o1-preview and o1-mini models were more capable: they could solve problems with up to 200 steps, but made a lot of mistakes when a problem had 250 steps.

What I find interesting about this is that the problem is not conceptually difficult. All of the models used the same basic strategy, going line by line and tallying the number of marbles in each jar. Where they differed was in their ability to maintain “focus” as the size of the problem grew. The o1 models aren’t perfect, but they’re a lot better at this than other frontier models.

So the o1 models are dramatically better at reasoning than previous LLMs from OpenAI or anyone else. But they aren’t perfect. Let’s look at an area where they still struggle.

The o1 models are bad at spatial reasoning

Here’s one of the few prompts that stumped both GPT-4o and the o1 models:

A city has seven north-south streets, from first street in the west to seventh street in the east. It has seven east-west streets, from A street in the north to G street in the south.

Third street is closed north of F street, so no cars are allowed to cross third on A, B, C, D, or E streets. E street is closed between third and sixth street, with no cars allowed to cross E street on fourth or fifth streets.

What is the shortest route to travel from second and B street to 4th and B street?

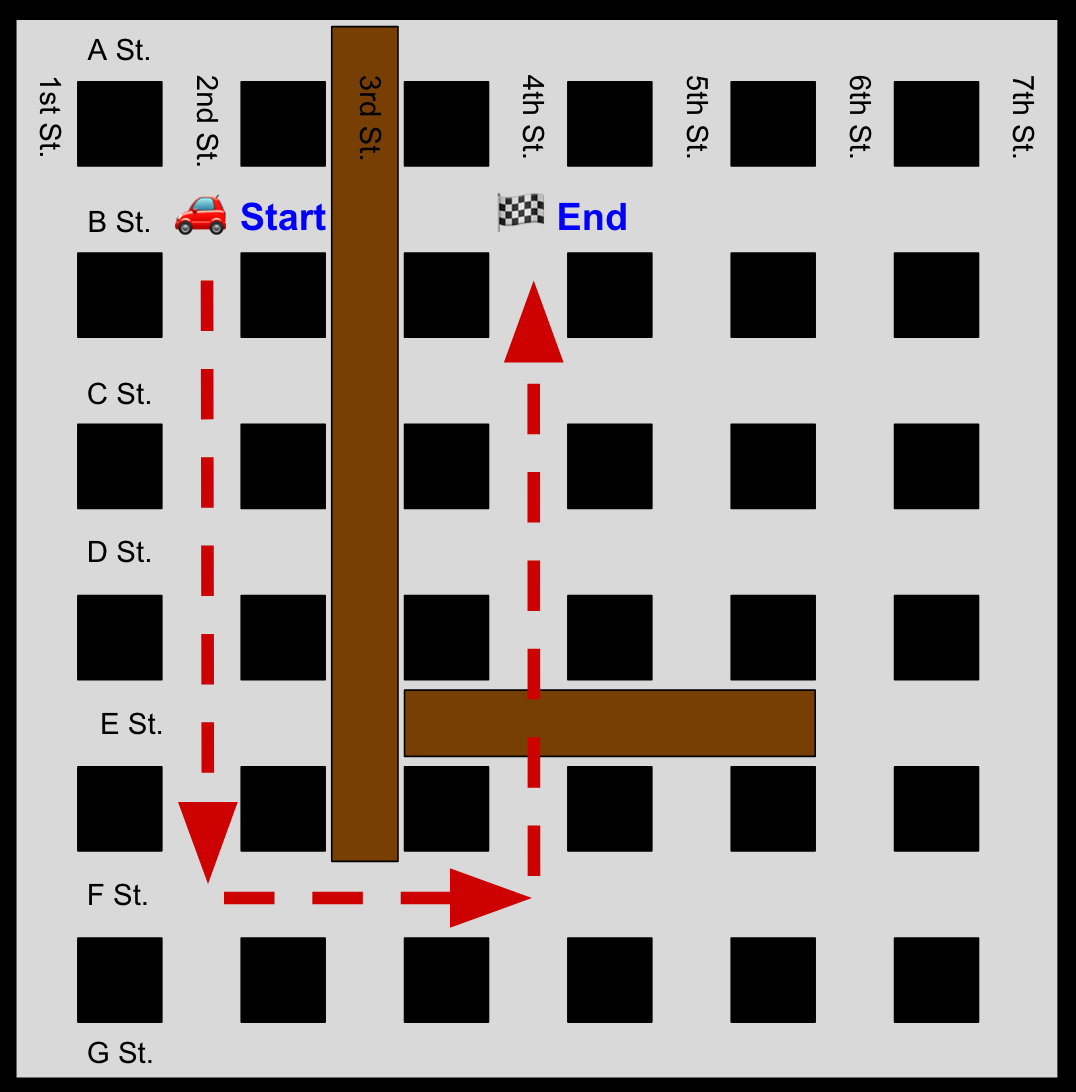

The o1 models can’t take image inputs yet, but I’ve diagrammed this scenario to help readers visualize it:

And here’s o1’s answer:

Possible Routes:

Direct Route (Not Feasible):

East on B Street from Second to Fourth Street: Not possible because you cannot cross Third Street on B Street due to the closure.

Alternate Route (Feasible and Shortest):

Step 1: South on Second Street from B Street to F Street (4 blocks south).

Step 2: East on F Street from Second to Fourth Street, crossing Third Street (2 blocks east).

Step 3: North on Fourth Street from F Street to B Street (4 blocks north).

This is the route o1 recommends:

That’s not right! And GPT-4o recommends the same invalid route.

The o1 models are equally clueless about chess. Here’s is a problem that stumped o1-preview:

A standard chess game starts as follows:

1. e4 e5

2. Qh5 Nf6

3. Qg6

What is black’s strongest move?

Again, the o1 models can’t take images as input, but for the convenience of readers here’s a diagram of the state of the board after these moves:

If you know anything about chess, you know that black should take the queen with either the h7 pawn or the f7 pawn (pawns capture diagonally). And when I asked GPT-4o, that’s exactly what it suggested.

The answer o1-preview gave was really bad. First it said “The pawn on g7 can capture the queen on g6.” This is nonsense because pawns only capture diagonally. The o1-preview model then recommended the move gxh6—in other words, the g pawn should take a non-existent piece at h6.

The pattern was similar for other chess scenarios. GPT-4o isn’t a good chess player, but most of the time it will at least suggest legal moves. In contrast, o1-preview was completely clueless about the state of the board, frequently recommending invalid moves.

My hypothesis is that GPT-4o is doing fairly crude pattern-matching from the sequence moves in the prompt. It doesn’t have deep insights into strategy, but there are enough chess games in the GPT-4o training data that it can at least guess at a plausibly legal move.

In contrast, o1 tries to actually analyze the state of the board. And it does such a bad job that it winds up spitting out total nonsense.

I think the underlying issue with both the chess and navigation problems is that LLMs don’t have an effective way to represent two-dimensional spaces with a one-dimensional sequence of tokens. They have no equivalent to a human being drawing a sketch on a piece of paper.

I tried to help the models out by giving them an ASCII art version of the chess board:

But this didn’t help. GPT-4o suggested that black move its queen one square diagonally so it’s in front of the king—a legal move and perhaps a good one. O1-preview suggested that black take the queen with the knight at F6—another illegal move.

The real world is far messier than math problems

I’m quite impressed by the o1 models, but I do want to point out something that all of my examples have in common: they contain all necessary information within the four corners of a relatively short problem statement.

Most problems in the real world aren’t like this. Human workers spend decades accumulating knowledge that makes us more effective at our jobs. Sometimes solving a problem requires remembering facts from a conversation we had, or a research paper we read, months or years ago. Sometimes we’re missing key information and have to figure out what to read or who to talk to in order to get it.

I don’t think OpenAI is close to mastering this kind of problem.

One reason is that the best publicly available LLMs have context windows of 2 million tokens or fewer. That’s far less information than any of us will encounter in our lifetimes.

More important, as my example of marbles in colored jars illustrates, the context window isn’t always the binding constraint.

My 250-step marble problem fit comfortably within o1-preview’s 128,000 token context window. Nevertheless, the model became overwhelmed by its complexity. And I suspect o1 would have performed even worse if I’d included 10 irrelevant sentences for each sentence about someone putting marbles into a jar. Because o1 would have wasted a lot of time “thinking about” these irrelevant sentences.

Human beings are good at thinking conceptually. When a human being reads a book, they will quickly forget most of the details but they often retain the book’s most important ideas. We do the same thing when we have a conversation or read a research paper.

Current LLMs—even o1—don’t seem to do this. As a result, they quickly get bogged down when asked to work on complex problems involving large quantities of information.

So while I’m impressed by how good LLMs have gotten at solving canned reasoning problems, I think it’s important for people not to confuse this with the kind of cognition required to effectively navigate the messiness of the real world. These models are still quite far from human-level intelligence.

OpenAI hasn’t yet released a version of o1 with vision capabilities, so I wasn’t able to test if it could count pieces of fruit, solve hand-written physics problems, or reason about other visual information.

OpenAI doesn’t show the full internal monologue of the o1 models, but it does show a running summary to give users some idea of what it’s doing.

OK, I had GPT-4o write the script, ran it, and then asked GPT-4o to fix any bugs I found. LLMs are wild.

The simplest task I've found that breaks every LLM is:

> Please multiply the following two numbers 45*58 using the "grade school" arithmetic algorithm, showing all steps including carries.

You can choose longer random numbers to increase the difficulty. I found that o1 could usually multiply five digit numbers but not six. 4o could multiply 2-digit or sometimes 3-digit numbers. Given that the number of steps in long multiplication is quadratic in the number of digits, that's a pretty big improvement!

Your headline had me expecting that you had discovered quite a bit more than minor improvements to GPT's ability to solve language puzzles. The fact that the latest release has become slightly better at playing language games falls squarely in the "that's neat, but what does this really get us?" category, sort of like the new podcasting capabilities of NotebookLM.

I know you avoid scare quotes around "reason" when it comes to LLMs and I agree that semantic wrangling about our descriptive vocabulary doesn't get us anywhere. However, "doing fairly crude pattern-matching" and "quickly get bogged down" seems more like ordinary machine learning capabilities than an "extraordinary ability."